Gibbs sampling#

In this notebook, we show how to use CUQIpy to sample hierarchical Bayesian models using Gibbs sampling.

Gibbs sampling is a Markov chain Monte Carlo (MCMC) method for sampling a joint probability distribution of multiple random variables. Instead of sampling all variables simultaneously, Gibbs sampling samples the variables sequentially. The sampling of each variable is achieved by sampling from the conditional distribution of that variable given (fixed, previously sampled) values of the other variables.

Gibbs sampling is often an efficient way of sampling from a joint distribution if the conditional distributions are easy to sample from. On the other hand, if the conditional distributions are highly correlated and/or are difficult to sample from, then Gibbs sampling can be very inefficient. For these reasons, Gibbs sampling is often a double-edged sword that needs to be used in the right context.

Learning objectives#

Going through this notebook you will see how to:

Describe the basic idea of Gibbs sampling.

Define a hierarchical Bayesian model using CUQIpy.

Define a Gibbs sampling scheme in CUQIpy.

Run the Gibbs sampler and analyze the results.

Table of contents#

cuqi.experimental.mcmc module, which are expected to become the default soon. Check out the documentation for more details.

Setup#

We start by importing the necessary modules.

import numpy as np

import matplotlib.pyplot as plt

import cuqi

from cuqi.testproblem import Deconvolution1D

from cuqi.distribution import Gaussian, Gamma, JointDistribution, GMRF, LMRF

from cuqi.experimental.mcmc import HybridGibbs, LinearRTO, Conjugate, UGLA, ConjugateApprox, Direct

from cuqi.problem import BayesianProblem

1. The basics of Gibbs sampling #

To begin, let us consider a very simple Bayesian problem with two random variables, \(d\) and \(x\).

As we have already seen in the previous notebooks, we can define the distributions in CUQIpy as follows:

d = Gamma(1, 1)

x = Gaussian(0, cov=lambda d: 1/d)

Our goal is to sample from the joint distribution \(p(d, x)\). We can think of this as our target distribution.

Note In this contrived example, we can actually sample from the joint distribution directly. However, we will use this example to illustrate the basic idea of Gibbs sampling.

We define the joint distribution in CUQIpy as follows:

target = JointDistribution(x, d)

print(target)

JointDistribution(

Equation:

p(x,d) = p(x|d)p(d)

Densities:

x ~ CUQI Gaussian. Conditioning variables ['d'].

d ~ CUQI Gamma.

)

One idea for sampling \(p(d,x)\) is to define the parameter \(\boldsymbol{\theta} = [d; x]\) and sample from distribution \(p(\boldsymbol{\theta})\) at the same time. However, this is only possible for simple models, where the joint distribution is easy to sample from.

Gibbs sampling is an alternative approach, where we iteratively sample each variable from its conditional distribution, given the values of all other variables in the distribution. For this task with \(p(d,x)\), we would sample from \(p(d|x)\) and \(p(x|d)\) iteratively. We can summarize this procedure as repeating the following steps:

where \(x^{(i)}\) and \(d^{(i)}\) are the values of \(x\) and \(d\) at iteration \(i\). It can be shown that samples drawn from the above scheme will be distributed according to \(p(d, x)\).

1.1 Defining a sampling strategy#

When the conditional distributions are easy to sample from, Gibbs sampling can be very efficient. In the example above, we aim to sample from the following conditional distributions:

Sample \(x^{(i+1)}\) from \(p(x \mid d^{(i)})\),

Sample \(d^{(i+1)}\) from \(p(d \mid x^{(i+1)})\propto L(d\mid x^{(i+1)})p(d)\),

where \(L(d\mid x):=p(x\mid d)\) is the likelihood function with respect to \(d\).

For step 1: Note that we already know that \(p(x\mid d)=\mathrm{Gaussian}(0, d^{-1})\). Hence, we can directly sample from \(x \mid d\) by sampling from \(\mathrm{Gaussian}(0, d^{-1})\).

For step 2: For \(p(d\mid x)\) we could use any MCMC sampler to sample with. However, note that the Gaussian and Gamma distributions are conjugate, so we can recognize \(p(d\mid x)\) as a Gamma distribution:

where \(\alpha=1\) and \(\beta=1\) are the original parameters of the Gamma distribution for \(d\).

Instead of manually deriving the Gamma distribution for the conjugate posterior, CUQIpy provides a Conjugate sampler, which can be used to automatically sample from any (supported) conjugate distributions. Similarly we can use the Direct sampler to sample from the Gaussian distribution.

The interface and design of the Conjugate and Direct samplers is still under development and may change in the future.

In summary, we define a Gibbs sampling scheme in CUQIpy as follows:

# Define the sampling strategy

sampling_strategy = {

'x' : Direct(),

'd' : Conjugate(),

}

We can then define the Gibbs sampler and sample from the joint distribution as follows:

# Gibbs sampler

sampler = HybridGibbs(target, sampling_strategy)

# Sample

sampler.warmup(1000)

sampler.sample(1000)

# Get samples removing the burn-in

samples = sampler.get_samples().burnthin(1000)

Warmup: 0%| | 0/1000 [00:00<?, ?it/s]

Warmup: 11%|█ | 112/1000 [00:00<00:00, 1119.23it/s]

Warmup: 22%|██▏ | 224/1000 [00:00<00:00, 1117.62it/s]

Warmup: 34%|███▎ | 336/1000 [00:00<00:00, 1087.81it/s]

Warmup: 45%|████▍ | 446/1000 [00:00<00:00, 1092.01it/s]

Warmup: 56%|█████▌ | 559/1000 [00:00<00:00, 1104.56it/s]

Warmup: 67%|██████▋ | 670/1000 [00:00<00:00, 1085.13it/s]

Warmup: 78%|███████▊ | 782/1000 [00:00<00:00, 1096.30it/s]

Warmup: 90%|████████▉ | 896/1000 [00:00<00:00, 1107.60it/s]

Warmup: 100%|██████████| 1000/1000 [00:00<00:00, 1100.47it/s]

Sample: 0%| | 0/1000 [00:00<?, ?it/s]

Sample: 11%|█ | 111/1000 [00:00<00:00, 1107.76it/s]

Sample: 22%|██▏ | 224/1000 [00:00<00:00, 1117.35it/s]

Sample: 34%|███▍ | 340/1000 [00:00<00:00, 1134.22it/s]

Sample: 46%|████▌ | 456/1000 [00:00<00:00, 1141.96it/s]

Sample: 57%|█████▋ | 571/1000 [00:00<00:00, 1113.05it/s]

Sample: 68%|██████▊ | 685/1000 [00:00<00:00, 1120.83it/s]

Sample: 80%|████████ | 800/1000 [00:00<00:00, 1128.32it/s]

Sample: 91%|█████████▏| 913/1000 [00:00<00:00, 1108.61it/s]

Sample: 100%|██████████| 1000/1000 [00:00<00:00, 1118.75it/s]

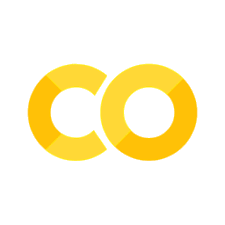

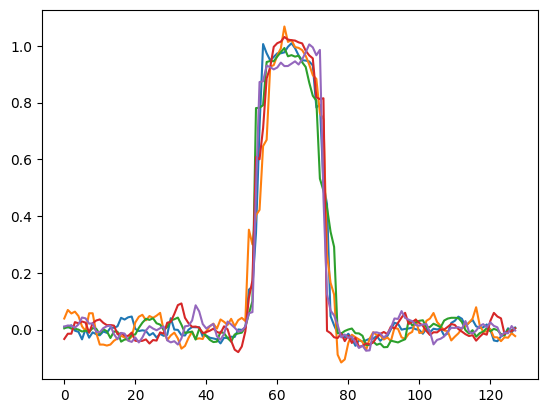

And we can visualize e.g. the trace plots as follows:

samples["x"].plot_trace()

samples["d"].plot_trace()

array([[<Axes: title={'center': 'd'}>, <Axes: title={'center': 'd'}>]],

dtype=object)

2. Gibbs for inverse problems #

The above example was a simple example to illustrate the basic idea of Gibbs sampling. However, often for these simple examples there exist more efficient sampling methods. In the following we instead focus on inverse problems where Gibbs sampling will prove to be useful, because efficient samplers are not readily available.”

2.1 Deterministic forward model and data#

Consider a Bayesian inverse problem

where \(\mathbf{A}: \mathbb{R}^n \to \mathbb{R}^m\) is the forward model of the inverse problem and \(\mathbf{y}\) and \(\mathbf{x}\) are random variables for the data and unknown parameter, respectively.

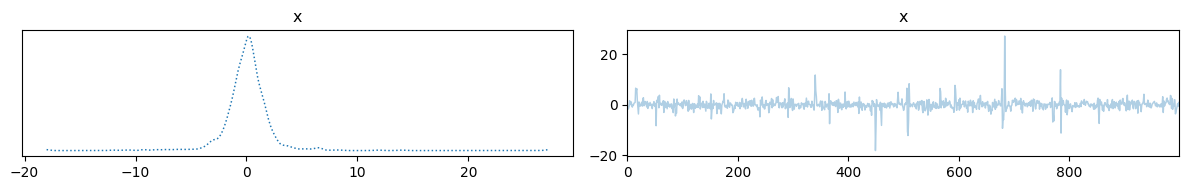

In this case, we assume that the forward model is a convolution operator. We can load this example from the testproblem library of CUQIpy and visualize the true solution (sharp signal) and data (convolved signal).

# Model and data

A, y_data, probinfo = Deconvolution1D(phantom='sinc', noise_std=0.005, PSF_param=6).get_components()

# Get dimension of signal

n = A.domain_dim

# Plot exact solution and observed data

plt.subplot(121)

probinfo.exactSolution.plot()

plt.title('Exact solution')

plt.subplot(122)

y_data.plot()

plt.title("Observed data")

# Print problem information

print(probinfo)

ProblemInfo with the following set attributes:

['infoString', 'exactData', 'exactSolution']

infoString: Noise type: Additive Gaussian with std: 0.005

2.2 Hierarchical Bayesian model#

We are now going to define a hierarchical Bayesian model for the inverse problem.

First, we are going to model the prior distribution of the parameter \(\mathbf{x}\) as a Gaussian Markov random field (GMRF), i.e.

This is a built-in distribution in CUQIpy, which models the difference between neighboring elements as a Gaussian, see GMRF for more details.

We do not know a good choice of precision parameter \(d\) for this prior, so we make use of lambda (anonymous) functions to make \(d\) a conditioning variable of the distribution. The distribution is defined in CUQIpy as follows.

# Define prior

x = GMRF(np.zeros(n), lambda d: d)

print(x)

CUQI GMRF. Conditioning variables ['d'].

★ Try yourself (optional):#

Try computing some samples from the GMRF distribution and visualize them. You can use the sample method of the GMRF distribution. You can also visualize the samples using the plot method of the obtained samples.

Hint: Since \(d\) is a conditioning variable, you need to specify the value of \(d\) when sampling.

# Your code here

The observed data is corrupted by additive Gaussian noise, so for random variable \(\mathbf{y}\) we have

where for this example, we pretend also not to know the noise precision \(s\).

Similar to the prior distribution, we can define this distribution as follows.

# Data distribution (likelihood)

y = Gaussian(A@x, cov=lambda s: 1/s)

print(y)

CUQI Gaussian. Conditioning variables ['x', 's'].

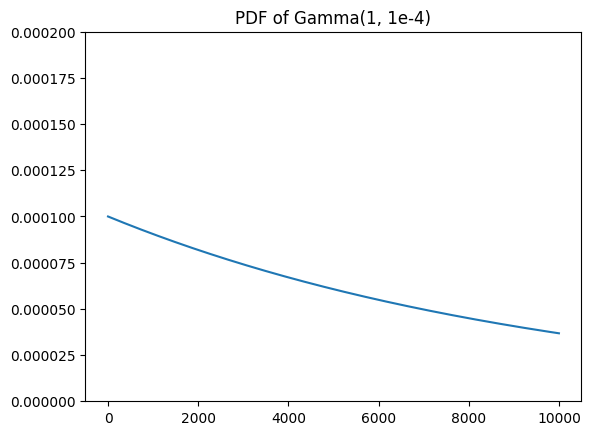

To model the two hyperparameters \(d\) and \(s\), we can use a weakly informative Gamma distribution, i.e. a distribution with a wide range of possible values.

d = Gamma(1, 1e-4)

s = Gamma(1, 1e-4)

print(d)

print(s)

CUQI Gamma.

CUQI Gamma.

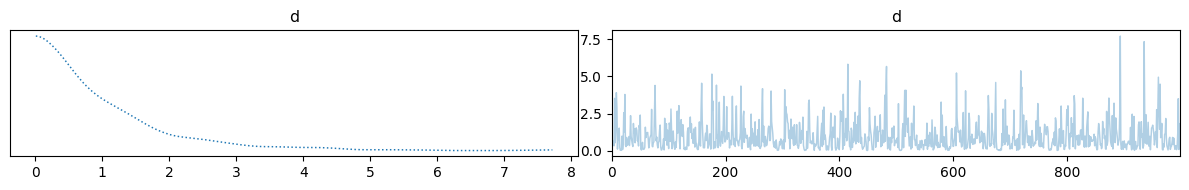

To see that the Gamma hyperprior is weakly informative, we can visualize the pdf of the Gamma distribution. Notice that the pdf is mostly flat for the range of values from \(0\) to \(10^4\). This means that the Gamma distribution is fairly uninformative for this range of values and assign equal probability to all values in this range.

grid = np.linspace(1e-4, 1e+4, 1000)

plt.plot(grid, [d.pdf(val) for val in grid])

plt.ylim(0, 2*1e-4)

plt.title("PDF of Gamma(1, 1e-4)");

In total, we can summarize the hierarchical Bayesian problem as follows (without explicitly writing the conditioning variables):

which in CUQIpy matches line-by-line:

d = Gamma(1, 1e-4)

s = Gamma(1, 1e-4)

x = GMRF(np.zeros(n), lambda d: d)

y = Gaussian(A@x, cov=lambda s: 1/s)

Posterior distribution#

We now define the posterior distribution by first creating the joint distribution \(p(\mathbf{y}, \mathbf{x}, d, s)\) and then conditioning on the observed data \(\mathbf{y}_\mathrm{data}\) to obtain the posterior which can be derived in density form as

This in done in CUQIpy as follows

# Create joint distribution

joint = JointDistribution(y, x, d, s)

# Define posterior by conditioning on the data

posterior = joint(y=y_data) # Automatically applies Bayes rule

# View the posterior

print(posterior)

JointDistribution(

Equation:

p(x,d,s|y) ∝ L(x,s|y)p(x|d)p(d)p(s)

Densities:

y ~ CUQI Gaussian Likelihood function. Parameters ['x', 's'].

x ~ CUQI GMRF. Conditioning variables ['d'].

d ~ CUQI Gamma.

s ~ CUQI Gamma.

)

Notice that after conditioning on the data, the distribution associated with \(\mathbf{y}\) became a likelihood function and that the posterior is now a joint distribution of the variables \(\mathbf{x}\), \(d\) and \(s\). It matches the mathematical expression above.

★ Try yourself (optional):#

Try evaluating the un-normalized log probability density (logd) of the posterior distribution \(p(\mathbf{x}, d, s \mid \mathbf{y}_\mathrm{data})\) at some points \(\mathbf{x}^*\in \mathbb{R}^n\), \(d^*\in \mathbb{R}_+\) and \(s^*\in \mathbb{R}_+\). Hint: The

logdmethod of theJointdistribution takes comma-separated keyword arguments for the conditioning variables. For example,logd(x=xstar, d=dstar, s=sstar).You can also try conditioning the posterior distribution on some of the variables, e.g.

posterior(x=xstar, d=dstar). This should compute the marginal distribution of \(s\) given \(\mathbf{x}^*\) and \(d^*\), i.e. \(p(s \mid \mathbf{y}_\mathrm{data}, \mathbf{x}^*, d^*)\).Experiment with sampling from a similar BIP that does not include hyperparameters \(d\) and \(s\) (use the values \(d=1\) and \(s=10000\) to determine the precision of the prior and the likelihood). You can create the BIP as follows. Hint: use the

UQmethod of theBayesianProblemto sample from the posterior distribution.

x2 = GMRF(np.zeros(n), prec=1)

y2 = Gaussian(A@x2, prec=10000)

BP2 = BayesianProblem(x2, y2)

BP2.set_data(y2=y_data)

# Your code here

2.3 Gibbs Sampler#

The hierarchical Bayesian problem above has some important properties that we can exploit to make the sampling more efficient.

First, note that for the pair of a Gaussian distribution with a Gamma distribution for the precision is a conjugate pair (as we also saw earlier). This means that the posterior distribution with respect to the parameter for the precision is also a Gamma distribution. This means that we can efficiently sample from \(d\) and \(s\) conditional on the other variables by exploiting conjugacy.

Second, note that the prior distribution of \(\mathbf{x}\) is

a Gaussian Markov random field (GMRF) and that the distribution for

\(\mathbf{y}\) is also Gaussian with a Linear operator acting

on \(\mathbf{x}\) as the mean variable. This means that we can

efficiently sample from \(\mathbf{x}\) conditional on the other

variables using the LinearRTO sampler.

Taking these two facts into account, we can define a Gibbs sampler

that uses the Conjugate sampler for \(d\) and \(s\) and

the LinearRTO sampler for \(\mathbf{x}\).

This is done in CUQIpy as follows:

# Define sampling strategy

sampling_strategy = {

'x': LinearRTO(),

'd': Conjugate(),

's': Conjugate()

}

# Define Gibbs sampler

sampler = HybridGibbs(posterior, sampling_strategy)

# Run sampler

sampler.warmup(200)

sampler.sample(1000)

# Get samples removing the burn-in

samples = sampler.get_samples().burnthin(200)

Warmup: 0%| | 0/200 [00:00<?, ?it/s]

Warmup: 8%|▊ | 17/200 [00:00<00:01, 160.22it/s]

Warmup: 17%|█▋ | 34/200 [00:00<00:01, 161.67it/s]

Warmup: 26%|██▌ | 51/200 [00:00<00:00, 163.52it/s]

Warmup: 34%|███▍ | 68/200 [00:00<00:00, 164.57it/s]

Warmup: 42%|████▎ | 85/200 [00:00<00:00, 165.76it/s]

Warmup: 51%|█████ | 102/200 [00:00<00:00, 166.18it/s]

Warmup: 60%|█████▉ | 119/200 [00:00<00:00, 164.86it/s]

Warmup: 68%|██████▊ | 136/200 [00:00<00:00, 165.83it/s]

Warmup: 76%|███████▋ | 153/200 [00:00<00:00, 166.40it/s]

Warmup: 85%|████████▌ | 170/200 [00:01<00:00, 166.99it/s]

Warmup: 94%|█████████▎| 187/200 [00:01<00:00, 167.49it/s]

Warmup: 100%|██████████| 200/200 [00:01<00:00, 165.95it/s]

Sample: 0%| | 0/1000 [00:00<?, ?it/s]

Sample: 2%|▏ | 17/1000 [00:00<00:05, 167.70it/s]

Sample: 3%|▎ | 34/1000 [00:00<00:05, 167.67it/s]

Sample: 5%|▌ | 51/1000 [00:00<00:05, 167.67it/s]

Sample: 7%|▋ | 68/1000 [00:00<00:05, 166.12it/s]

Sample: 8%|▊ | 85/1000 [00:00<00:05, 166.38it/s]

Sample: 10%|█ | 102/1000 [00:00<00:05, 166.76it/s]

Sample: 12%|█▏ | 119/1000 [00:00<00:05, 166.96it/s]

Sample: 14%|█▎ | 137/1000 [00:00<00:05, 167.98it/s]

Sample: 15%|█▌ | 154/1000 [00:00<00:05, 168.49it/s]

Sample: 17%|█▋ | 171/1000 [00:01<00:04, 168.77it/s]

Sample: 19%|█▉ | 188/1000 [00:01<00:04, 169.05it/s]

Sample: 21%|██ | 206/1000 [00:01<00:04, 169.47it/s]

Sample: 22%|██▏ | 223/1000 [00:01<00:04, 169.31it/s]

Sample: 24%|██▍ | 241/1000 [00:01<00:04, 169.54it/s]

Sample: 26%|██▌ | 258/1000 [00:01<00:04, 168.90it/s]

Sample: 28%|██▊ | 275/1000 [00:01<00:04, 168.39it/s]

Sample: 29%|██▉ | 292/1000 [00:01<00:04, 167.96it/s]

Sample: 31%|███ | 310/1000 [00:01<00:04, 168.91it/s]

Sample: 33%|███▎ | 328/1000 [00:01<00:03, 169.48it/s]

Sample: 34%|███▍ | 345/1000 [00:02<00:03, 169.23it/s]

Sample: 36%|███▌ | 362/1000 [00:02<00:03, 169.37it/s]

Sample: 38%|███▊ | 379/1000 [00:02<00:03, 169.37it/s]

Sample: 40%|███▉ | 396/1000 [00:02<00:03, 169.32it/s]

Sample: 41%|████▏ | 413/1000 [00:02<00:03, 168.91it/s]

Sample: 43%|████▎ | 430/1000 [00:02<00:03, 168.72it/s]

Sample: 45%|████▍ | 447/1000 [00:02<00:03, 168.75it/s]

Sample: 46%|████▋ | 464/1000 [00:02<00:03, 169.11it/s]

Sample: 48%|████▊ | 481/1000 [00:02<00:03, 169.25it/s]

Sample: 50%|████▉ | 498/1000 [00:02<00:02, 168.53it/s]

Sample: 52%|█████▏ | 515/1000 [00:03<00:02, 168.63it/s]

Sample: 53%|█████▎ | 533/1000 [00:03<00:02, 169.15it/s]

Sample: 55%|█████▌ | 551/1000 [00:03<00:02, 168.78it/s]

Sample: 57%|█████▋ | 568/1000 [00:03<00:02, 168.93it/s]

Sample: 59%|█████▊ | 586/1000 [00:03<00:02, 169.65it/s]

Sample: 60%|██████ | 604/1000 [00:03<00:02, 169.95it/s]

Sample: 62%|██████▏ | 622/1000 [00:03<00:02, 170.04it/s]

Sample: 64%|██████▍ | 640/1000 [00:03<00:02, 170.10it/s]

Sample: 66%|██████▌ | 658/1000 [00:03<00:02, 170.06it/s]

Sample: 68%|██████▊ | 676/1000 [00:04<00:01, 169.84it/s]

Sample: 69%|██████▉ | 694/1000 [00:04<00:01, 170.18it/s]

Sample: 71%|███████ | 712/1000 [00:04<00:01, 170.05it/s]

Sample: 73%|███████▎ | 730/1000 [00:04<00:01, 169.82it/s]

Sample: 75%|███████▍ | 747/1000 [00:04<00:01, 168.04it/s]

Sample: 76%|███████▋ | 764/1000 [00:04<00:01, 168.29it/s]

Sample: 78%|███████▊ | 782/1000 [00:04<00:01, 168.92it/s]

Sample: 80%|████████ | 800/1000 [00:04<00:01, 169.50it/s]

Sample: 82%|████████▏ | 818/1000 [00:04<00:01, 169.76it/s]

Sample: 84%|████████▎ | 836/1000 [00:04<00:00, 169.98it/s]

Sample: 85%|████████▌ | 853/1000 [00:05<00:00, 169.81it/s]

Sample: 87%|████████▋ | 870/1000 [00:05<00:00, 169.48it/s]

Sample: 89%|████████▊ | 887/1000 [00:05<00:00, 169.23it/s]

Sample: 90%|█████████ | 904/1000 [00:05<00:00, 169.42it/s]

Sample: 92%|█████████▏| 922/1000 [00:05<00:00, 170.09it/s]

Sample: 94%|█████████▍| 940/1000 [00:05<00:00, 170.49it/s]

Sample: 96%|█████████▌| 958/1000 [00:05<00:00, 170.21it/s]

Sample: 98%|█████████▊| 976/1000 [00:05<00:00, 169.50it/s]

Sample: 99%|█████████▉| 994/1000 [00:05<00:00, 170.06it/s]

Sample: 100%|██████████| 1000/1000 [00:05<00:00, 167.10it/s]

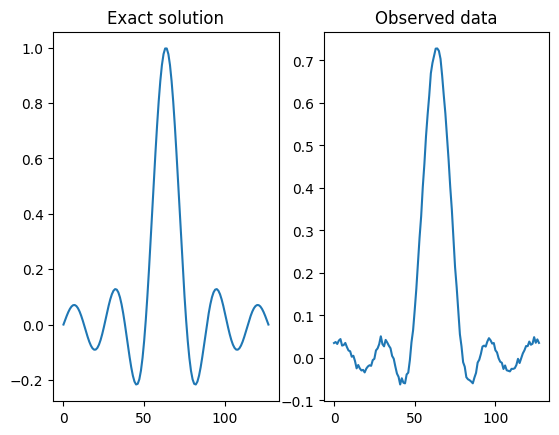

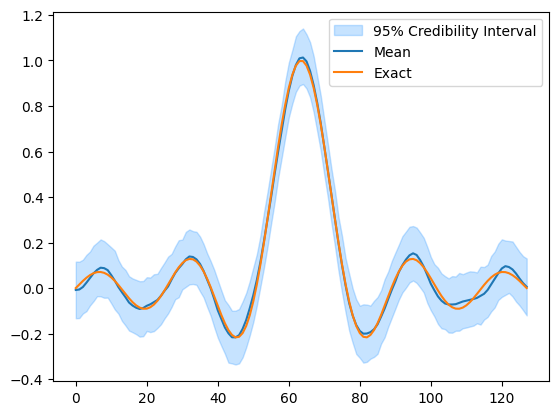

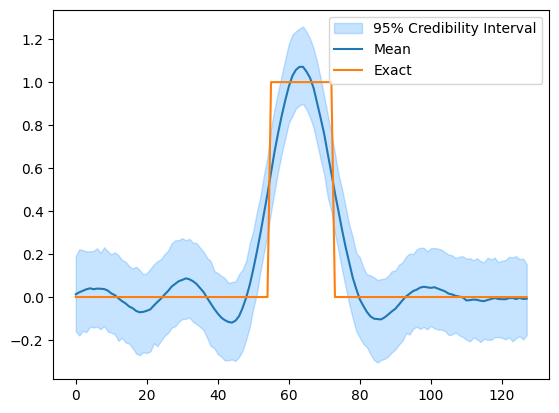

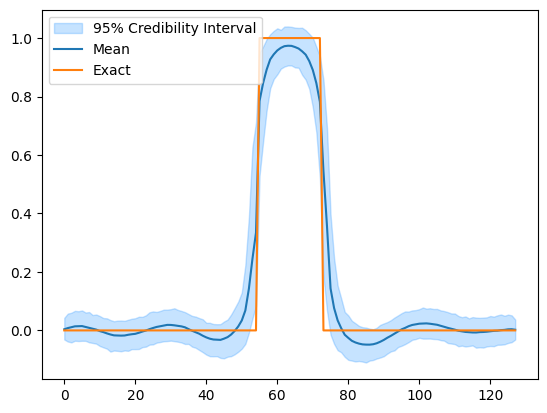

2.4 Analyze results#

After sampling we can inspect the results. The samples are stored as a dictionary with the variable names as keys. Samples for each variable is stored as a CUQIpy Samples object which contains the many convenience methods for diagnostics and plotting of MCMC samples.

# Plot credible intervals for x

samples['x'].plot_ci(exact=probinfo.exactSolution)

[<matplotlib.lines.Line2D at 0x7f39bdc96fe0>,

<matplotlib.lines.Line2D at 0x7f39bdca4bb0>,

<matplotlib.collections.PolyCollection at 0x7f39bdc95060>]

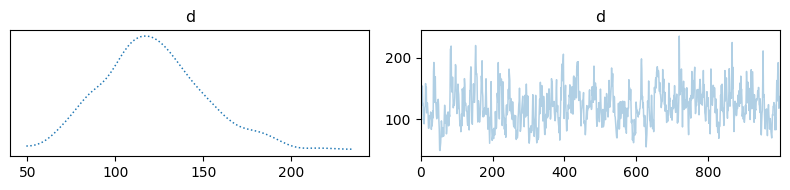

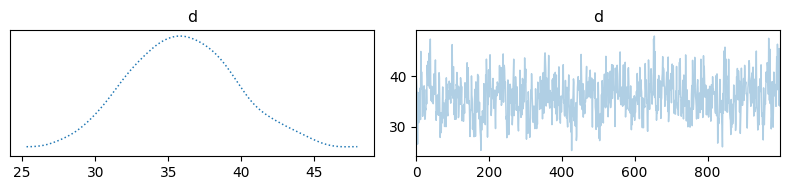

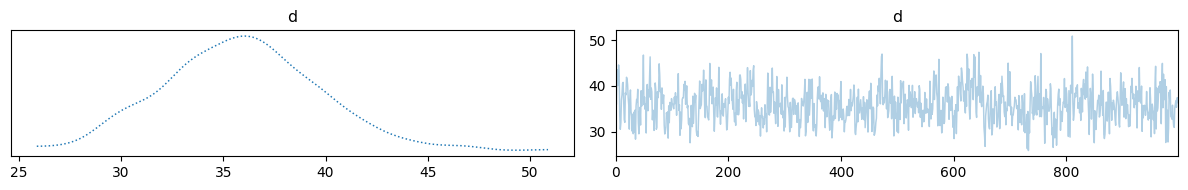

Trace plot for d

samples['d'].plot_trace(figsize=(8,2))

array([[<Axes: title={'center': 'd'}>, <Axes: title={'center': 'd'}>]],

dtype=object)

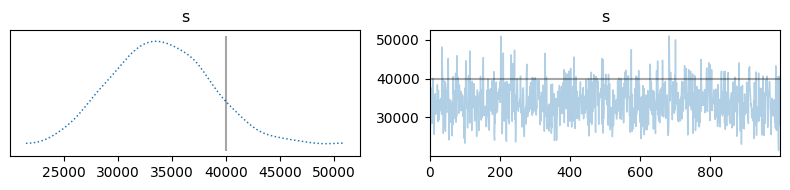

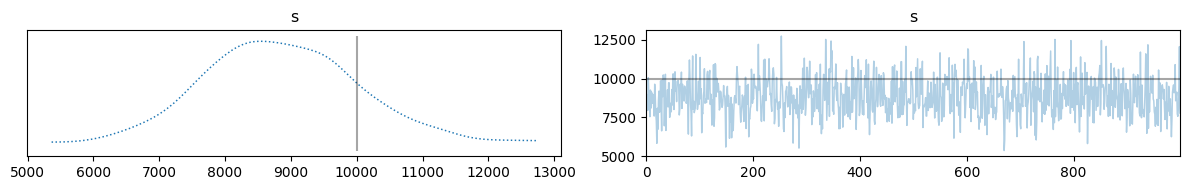

Trace plot for s

samples['s'].plot_trace(figsize=(8,2), exact=1/0.005**2)

array([[<Axes: title={'center': 's'}>, <Axes: title={'center': 's'}>]],

dtype=object)

Notice that the true noise standard deviation was \(0.005\) which translates to a precision of \(s=40000\). The trace plot for \(s\) shows that the sampler has converged close to the true value.

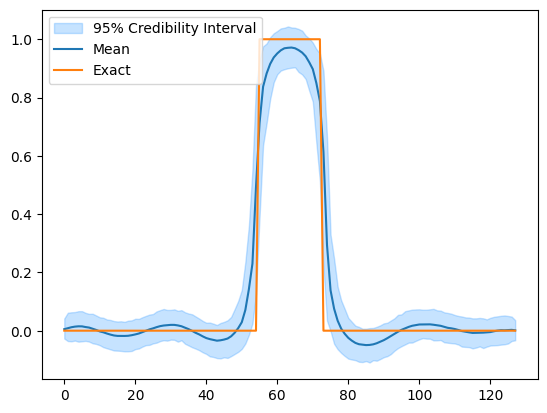

3 Exploring other priors #

Suppose the true solution was not modelled well by a GMRF prior. This would for example be the case for a piece-wise constant “square” signal. We can quickly try that experiment by generating new data from the test problem with a square phantom.

For illustration we repeat all the code steps from above in a single cell.

# Deterministic forward model + data

A, y_data, probinfo = Deconvolution1D(phantom='square').get_components()

# Bayesian model

d = Gamma(1, 1e-4)

s = Gamma(1, 1e-4)

x = GMRF(np.zeros(n), lambda d: d)

y = Gaussian(A@x, cov=lambda s: 1/s)

# Joint distribution

joint = JointDistribution(y, x, d, s)

# Posterior

posterior = joint(y=y_data)

# Sampling strategy

sampling_strategy = {

'x': LinearRTO(),

'd': Conjugate(),

's': Conjugate()

}

# Gibbs sampler

sampler = HybridGibbs(posterior, sampling_strategy)

# Run sampler

sampler.warmup(200)

sampler.sample(1000)

# Get samples removing the burn-in

samples = sampler.get_samples().burnthin(200)

# Plot credible intervals for x

samples['x'].plot_ci(exact=probinfo.exactSolution)

Warmup: 0%| | 0/200 [00:00<?, ?it/s]

Warmup: 9%|▉ | 18/200 [00:00<00:01, 169.38it/s]

Warmup: 18%|█▊ | 35/200 [00:00<00:00, 168.98it/s]

Warmup: 26%|██▋ | 53/200 [00:00<00:00, 169.47it/s]

Warmup: 36%|███▌ | 71/200 [00:00<00:00, 169.86it/s]

Warmup: 44%|████▍ | 88/200 [00:00<00:00, 169.58it/s]

Warmup: 53%|█████▎ | 106/200 [00:00<00:00, 169.90it/s]

Warmup: 62%|██████▏ | 123/200 [00:00<00:00, 169.81it/s]

Warmup: 70%|███████ | 141/200 [00:00<00:00, 169.87it/s]

Warmup: 79%|███████▉ | 158/200 [00:00<00:00, 168.98it/s]

Warmup: 88%|████████▊ | 176/200 [00:01<00:00, 169.81it/s]

Warmup: 96%|█████████▋| 193/200 [00:01<00:00, 169.53it/s]

Warmup: 100%|██████████| 200/200 [00:01<00:00, 169.52it/s]

Sample: 0%| | 0/1000 [00:00<?, ?it/s]

Sample: 2%|▏ | 18/1000 [00:00<00:05, 171.68it/s]

Sample: 4%|▎ | 36/1000 [00:00<00:05, 169.39it/s]

Sample: 5%|▌ | 53/1000 [00:00<00:05, 168.55it/s]

Sample: 7%|▋ | 70/1000 [00:00<00:05, 167.69it/s]

Sample: 9%|▊ | 87/1000 [00:00<00:05, 167.59it/s]

Sample: 10%|█ | 104/1000 [00:00<00:05, 167.66it/s]

Sample: 12%|█▏ | 121/1000 [00:00<00:05, 167.84it/s]

Sample: 14%|█▍ | 138/1000 [00:00<00:05, 167.95it/s]

Sample: 16%|█▌ | 155/1000 [00:00<00:05, 168.53it/s]

Sample: 17%|█▋ | 172/1000 [00:01<00:04, 168.64it/s]

Sample: 19%|█▉ | 190/1000 [00:01<00:04, 169.11it/s]

Sample: 21%|██ | 208/1000 [00:01<00:04, 169.58it/s]

Sample: 23%|██▎ | 226/1000 [00:01<00:04, 169.86it/s]

Sample: 24%|██▍ | 244/1000 [00:01<00:04, 170.03it/s]

Sample: 26%|██▌ | 262/1000 [00:01<00:04, 170.39it/s]

Sample: 28%|██▊ | 280/1000 [00:01<00:04, 170.32it/s]

Sample: 30%|██▉ | 298/1000 [00:01<00:04, 169.89it/s]

Sample: 32%|███▏ | 315/1000 [00:01<00:04, 169.50it/s]

Sample: 33%|███▎ | 332/1000 [00:01<00:03, 168.59it/s]

Sample: 35%|███▍ | 349/1000 [00:02<00:03, 168.62it/s]

Sample: 37%|███▋ | 366/1000 [00:02<00:03, 168.52it/s]

Sample: 38%|███▊ | 384/1000 [00:02<00:03, 168.87it/s]

Sample: 40%|████ | 402/1000 [00:02<00:03, 169.48it/s]

Sample: 42%|████▏ | 420/1000 [00:02<00:03, 169.91it/s]

Sample: 44%|████▍ | 438/1000 [00:02<00:03, 170.21it/s]

Sample: 46%|████▌ | 456/1000 [00:02<00:03, 170.51it/s]

Sample: 47%|████▋ | 474/1000 [00:02<00:03, 169.47it/s]

Sample: 49%|████▉ | 492/1000 [00:02<00:02, 169.70it/s]

Sample: 51%|█████ | 509/1000 [00:03<00:02, 169.78it/s]

Sample: 53%|█████▎ | 527/1000 [00:03<00:02, 170.44it/s]

Sample: 55%|█████▍ | 545/1000 [00:03<00:02, 170.27it/s]

Sample: 56%|█████▋ | 563/1000 [00:03<00:02, 170.03it/s]

Sample: 58%|█████▊ | 581/1000 [00:03<00:02, 169.47it/s]

Sample: 60%|█████▉ | 598/1000 [00:03<00:02, 169.61it/s]

Sample: 62%|██████▏ | 615/1000 [00:03<00:02, 169.34it/s]

Sample: 63%|██████▎ | 632/1000 [00:03<00:02, 169.38it/s]

Sample: 65%|██████▍ | 649/1000 [00:03<00:02, 169.33it/s]

Sample: 67%|██████▋ | 666/1000 [00:03<00:01, 169.33it/s]

Sample: 68%|██████▊ | 683/1000 [00:04<00:01, 168.99it/s]

Sample: 70%|███████ | 701/1000 [00:04<00:01, 169.24it/s]

Sample: 72%|███████▏ | 718/1000 [00:04<00:01, 169.44it/s]

Sample: 74%|███████▎ | 735/1000 [00:04<00:01, 169.55it/s]

Sample: 75%|███████▌ | 753/1000 [00:04<00:01, 169.69it/s]

Sample: 77%|███████▋ | 771/1000 [00:04<00:01, 170.04it/s]

Sample: 79%|███████▉ | 789/1000 [00:04<00:01, 169.86it/s]

Sample: 81%|████████ | 806/1000 [00:04<00:01, 169.13it/s]

Sample: 82%|████████▏ | 823/1000 [00:04<00:01, 169.30it/s]

Sample: 84%|████████▍ | 840/1000 [00:04<00:00, 169.05it/s]

Sample: 86%|████████▌ | 857/1000 [00:05<00:00, 168.26it/s]

Sample: 87%|████████▋ | 874/1000 [00:05<00:00, 168.63it/s]

Sample: 89%|████████▉ | 892/1000 [00:05<00:00, 169.03it/s]

Sample: 91%|█████████ | 909/1000 [00:05<00:00, 169.08it/s]

Sample: 93%|█████████▎| 926/1000 [00:05<00:00, 169.11it/s]

Sample: 94%|█████████▍| 944/1000 [00:05<00:00, 169.70it/s]

Sample: 96%|█████████▌| 962/1000 [00:05<00:00, 170.19it/s]

Sample: 98%|█████████▊| 980/1000 [00:05<00:00, 170.26it/s]

Sample: 100%|█████████▉| 998/1000 [00:05<00:00, 170.48it/s]

Sample: 100%|██████████| 1000/1000 [00:05<00:00, 169.38it/s]

[<matplotlib.lines.Line2D at 0x7f39bdbaa650>,

<matplotlib.lines.Line2D at 0x7f39bdbaa710>,

<matplotlib.collections.PolyCollection at 0x7f39bdba9e70>]

Notice that while the sampling still ran, the posterior distribution does not match the characteristics of the square phantom. We can improve this result by switching to a prior that better matches this kind of signal.

One choice is the LMRF prior, which assumes a Laplace distribution for the differences between neighboring elements of \(\mathbf{x}\) instead of a Gaussian. That is we assume

which translates to the assumption that \(x_i-x_{i-1} \sim \mathrm{Laplace}(0, d^{-1})\) for \(i=1, \ldots, n\) (excluding boundaries)

This prior is implemented in CUQIpy as the LMRF distribution.

To update our model we simply need to replace the GMRF distribution

with the LMRF distribution. Note that the Laplace distribution

is defined via a scale parameter, so we invert the parameter \(d\).

This laplace distribution and new posterior can be defined as follows:

# Define new distribution for x

x = LMRF(np.zeros(n), lambda d: 1/d)

# Define new joint distribution with piecewise constant prior

joint_Ld = JointDistribution(y, x, d, s)

# Define new posterior by conditioning on the data

posterior_Ld = joint_Ld(y=y_data)

print(posterior_Ld)

JointDistribution(

Equation:

p(x,d,s|y) ∝ L(x,s|y)p(x|d)p(d)p(s)

Densities:

y ~ CUQI Gaussian Likelihood function. Parameters ['x', 's'].

x ~ CUQI LMRF. Conditioning variables ['d'].

d ~ CUQI Gamma.

s ~ CUQI Gamma.

)

3.1 Gibbs Sampler (with Laplace prior)#

Using the same approach as ealier we can define a Gibbs sampler

for this new hierarchical model. The only difference is that we

now need to use a different sampler for \(\mathbf{x}\) because

the LinearRTO sampler only works for Gaussian distributions.

In this case we use the UGLA sampler for \(\mathbf{x}\). We also use an approximate Conjugate sampler for \(d\) which approximately samples from the posterior distribution of \(d\) conditional on the other variables in an efficient manner. For more details see e.g. Uribe et al. (2021).

# Define sampling strategy

sampling_strategy = {

'x': UGLA(),

'd': ConjugateApprox(),

's': Conjugate()

}

# Define Gibbs sampler

sampler_Ld = HybridGibbs(posterior_Ld, sampling_strategy)

# Run sampler

sampler_Ld.warmup(200)

sampler_Ld.sample(1000)

# Get samples removing the burn-in

samples_Ld = sampler_Ld.get_samples().burnthin(200)

Warmup: 0%| | 0/200 [00:00<?, ?it/s]

Warmup: 3%|▎ | 6/200 [00:00<00:03, 50.49it/s]

Warmup: 6%|▌ | 12/200 [00:00<00:03, 49.47it/s]

Warmup: 8%|▊ | 17/200 [00:00<00:03, 49.25it/s]

Warmup: 11%|█ | 22/200 [00:00<00:03, 49.04it/s]

Warmup: 14%|█▎ | 27/200 [00:00<00:03, 48.88it/s]

Warmup: 16%|█▌ | 32/200 [00:00<00:03, 48.80it/s]

Warmup: 18%|█▊ | 37/200 [00:00<00:03, 48.87it/s]

Warmup: 21%|██ | 42/200 [00:00<00:03, 48.72it/s]

Warmup: 24%|██▎ | 47/200 [00:00<00:03, 48.54it/s]

Warmup: 26%|██▌ | 52/200 [00:01<00:03, 48.59it/s]

Warmup: 28%|██▊ | 57/200 [00:01<00:02, 48.44it/s]

Warmup: 31%|███ | 62/200 [00:01<00:02, 48.46it/s]

Warmup: 34%|███▎ | 67/200 [00:01<00:02, 48.56it/s]

Warmup: 36%|███▌ | 72/200 [00:01<00:02, 48.59it/s]

Warmup: 38%|███▊ | 77/200 [00:01<00:02, 48.64it/s]

Warmup: 41%|████ | 82/200 [00:01<00:02, 48.75it/s]

Warmup: 44%|████▎ | 87/200 [00:01<00:02, 48.82it/s]

Warmup: 46%|████▌ | 92/200 [00:01<00:02, 48.87it/s]

Warmup: 48%|████▊ | 97/200 [00:01<00:02, 48.01it/s]

Warmup: 51%|█████ | 102/200 [00:02<00:02, 48.44it/s]

Warmup: 54%|█████▎ | 107/200 [00:02<00:01, 48.77it/s]

Warmup: 56%|█████▌ | 112/200 [00:02<00:01, 48.75it/s]

Warmup: 58%|█████▊ | 117/200 [00:02<00:01, 48.94it/s]

Warmup: 61%|██████ | 122/200 [00:02<00:01, 48.93it/s]

Warmup: 64%|██████▎ | 127/200 [00:02<00:01, 48.75it/s]

Warmup: 66%|██████▌ | 132/200 [00:02<00:01, 48.32it/s]

Warmup: 68%|██████▊ | 137/200 [00:02<00:01, 48.50it/s]

Warmup: 71%|███████ | 142/200 [00:02<00:01, 48.62it/s]

Warmup: 74%|███████▎ | 147/200 [00:03<00:01, 48.75it/s]

Warmup: 76%|███████▌ | 152/200 [00:03<00:00, 48.80it/s]

Warmup: 78%|███████▊ | 157/200 [00:03<00:00, 48.96it/s]

Warmup: 81%|████████ | 162/200 [00:03<00:00, 49.18it/s]

Warmup: 84%|████████▎ | 167/200 [00:03<00:00, 49.14it/s]

Warmup: 86%|████████▌ | 172/200 [00:03<00:00, 49.04it/s]

Warmup: 88%|████████▊ | 177/200 [00:03<00:00, 48.84it/s]

Warmup: 91%|█████████ | 182/200 [00:03<00:00, 49.05it/s]

Warmup: 94%|█████████▎| 187/200 [00:03<00:00, 49.10it/s]

Warmup: 96%|█████████▌| 192/200 [00:03<00:00, 48.86it/s]

Warmup: 98%|█████████▊| 197/200 [00:04<00:00, 48.65it/s]

Warmup: 100%|██████████| 200/200 [00:04<00:00, 48.78it/s]

Sample: 0%| | 0/1000 [00:00<?, ?it/s]

Sample: 0%| | 5/1000 [00:00<00:20, 49.18it/s]

Sample: 1%| | 10/1000 [00:00<00:20, 48.97it/s]

Sample: 2%|▏ | 15/1000 [00:00<00:20, 49.01it/s]

Sample: 2%|▏ | 20/1000 [00:00<00:19, 49.11it/s]

Sample: 2%|▎ | 25/1000 [00:00<00:19, 48.97it/s]

Sample: 3%|▎ | 30/1000 [00:00<00:20, 48.22it/s]

Sample: 4%|▎ | 35/1000 [00:00<00:19, 48.39it/s]

Sample: 4%|▍ | 40/1000 [00:00<00:19, 48.26it/s]

Sample: 4%|▍ | 45/1000 [00:00<00:19, 48.20it/s]

Sample: 5%|▌ | 50/1000 [00:01<00:19, 48.40it/s]

Sample: 6%|▌ | 55/1000 [00:01<00:19, 48.43it/s]

Sample: 6%|▌ | 60/1000 [00:01<00:19, 48.46it/s]

Sample: 6%|▋ | 65/1000 [00:01<00:19, 48.45it/s]

Sample: 7%|▋ | 70/1000 [00:01<00:19, 48.42it/s]

Sample: 8%|▊ | 75/1000 [00:01<00:19, 48.36it/s]

Sample: 8%|▊ | 80/1000 [00:01<00:18, 48.46it/s]

Sample: 8%|▊ | 85/1000 [00:01<00:18, 48.54it/s]

Sample: 9%|▉ | 90/1000 [00:01<00:18, 48.62it/s]

Sample: 10%|▉ | 95/1000 [00:01<00:18, 48.60it/s]

Sample: 10%|█ | 100/1000 [00:02<00:18, 48.79it/s]

Sample: 10%|█ | 105/1000 [00:02<00:18, 48.70it/s]

Sample: 11%|█ | 110/1000 [00:02<00:18, 48.84it/s]

Sample: 12%|█▏ | 115/1000 [00:02<00:18, 48.73it/s]

Sample: 12%|█▏ | 120/1000 [00:02<00:18, 48.67it/s]

Sample: 12%|█▎ | 125/1000 [00:02<00:17, 48.70it/s]

Sample: 13%|█▎ | 130/1000 [00:02<00:17, 48.88it/s]

Sample: 14%|█▎ | 135/1000 [00:02<00:17, 49.07it/s]

Sample: 14%|█▍ | 140/1000 [00:02<00:17, 49.24it/s]

Sample: 14%|█▍ | 145/1000 [00:02<00:17, 49.29it/s]

Sample: 15%|█▌ | 150/1000 [00:03<00:17, 49.09it/s]

Sample: 16%|█▌ | 155/1000 [00:03<00:17, 48.76it/s]

Sample: 16%|█▌ | 160/1000 [00:03<00:17, 48.38it/s]

Sample: 16%|█▋ | 165/1000 [00:03<00:17, 48.46it/s]

Sample: 17%|█▋ | 170/1000 [00:03<00:17, 48.56it/s]

Sample: 18%|█▊ | 175/1000 [00:03<00:16, 48.79it/s]

Sample: 18%|█▊ | 180/1000 [00:03<00:16, 48.66it/s]

Sample: 18%|█▊ | 185/1000 [00:03<00:16, 48.38it/s]

Sample: 19%|█▉ | 190/1000 [00:03<00:16, 48.30it/s]

Sample: 20%|█▉ | 195/1000 [00:04<00:16, 48.27it/s]

Sample: 20%|██ | 200/1000 [00:04<00:16, 48.29it/s]

Sample: 20%|██ | 205/1000 [00:04<00:16, 48.28it/s]

Sample: 21%|██ | 210/1000 [00:04<00:16, 48.32it/s]

Sample: 22%|██▏ | 215/1000 [00:04<00:16, 48.47it/s]

Sample: 22%|██▏ | 220/1000 [00:04<00:16, 48.36it/s]

Sample: 22%|██▎ | 225/1000 [00:04<00:15, 48.44it/s]

Sample: 23%|██▎ | 230/1000 [00:04<00:15, 48.39it/s]

Sample: 24%|██▎ | 235/1000 [00:04<00:15, 48.38it/s]

Sample: 24%|██▍ | 240/1000 [00:04<00:15, 48.38it/s]

Sample: 24%|██▍ | 245/1000 [00:05<00:15, 48.50it/s]

Sample: 25%|██▌ | 250/1000 [00:05<00:15, 48.45it/s]

Sample: 26%|██▌ | 255/1000 [00:05<00:15, 48.27it/s]

Sample: 26%|██▌ | 260/1000 [00:05<00:15, 48.35it/s]

Sample: 26%|██▋ | 265/1000 [00:05<00:15, 48.27it/s]

Sample: 27%|██▋ | 270/1000 [00:05<00:15, 48.19it/s]

Sample: 28%|██▊ | 275/1000 [00:05<00:15, 48.24it/s]

Sample: 28%|██▊ | 280/1000 [00:05<00:14, 48.30it/s]

Sample: 28%|██▊ | 285/1000 [00:05<00:14, 48.10it/s]

Sample: 29%|██▉ | 290/1000 [00:05<00:14, 48.03it/s]

Sample: 30%|██▉ | 295/1000 [00:06<00:14, 48.30it/s]

Sample: 30%|███ | 300/1000 [00:06<00:14, 48.38it/s]

Sample: 30%|███ | 305/1000 [00:06<00:14, 48.66it/s]

Sample: 31%|███ | 310/1000 [00:06<00:14, 49.04it/s]

Sample: 32%|███▏ | 315/1000 [00:06<00:13, 49.30it/s]

Sample: 32%|███▏ | 320/1000 [00:06<00:13, 49.15it/s]

Sample: 32%|███▎ | 325/1000 [00:06<00:13, 49.39it/s]

Sample: 33%|███▎ | 330/1000 [00:06<00:13, 49.48it/s]

Sample: 34%|███▎ | 335/1000 [00:06<00:13, 49.24it/s]

Sample: 34%|███▍ | 340/1000 [00:06<00:13, 49.29it/s]

Sample: 34%|███▍ | 345/1000 [00:07<00:13, 49.10it/s]

Sample: 35%|███▌ | 350/1000 [00:07<00:13, 48.97it/s]

Sample: 36%|███▌ | 355/1000 [00:07<00:13, 48.99it/s]

Sample: 36%|███▌ | 360/1000 [00:07<00:13, 48.60it/s]

Sample: 36%|███▋ | 365/1000 [00:07<00:13, 48.36it/s]

Sample: 37%|███▋ | 370/1000 [00:07<00:12, 48.67it/s]

Sample: 38%|███▊ | 375/1000 [00:07<00:12, 49.06it/s]

Sample: 38%|███▊ | 380/1000 [00:07<00:12, 49.26it/s]

Sample: 38%|███▊ | 385/1000 [00:07<00:12, 49.18it/s]

Sample: 39%|███▉ | 390/1000 [00:08<00:12, 49.36it/s]

Sample: 40%|███▉ | 395/1000 [00:08<00:12, 49.22it/s]

Sample: 40%|████ | 400/1000 [00:08<00:12, 49.01it/s]

Sample: 40%|████ | 405/1000 [00:08<00:12, 49.12it/s]

Sample: 41%|████ | 410/1000 [00:08<00:11, 49.25it/s]

Sample: 42%|████▏ | 415/1000 [00:08<00:11, 49.23it/s]

Sample: 42%|████▏ | 420/1000 [00:08<00:11, 48.94it/s]

Sample: 42%|████▎ | 425/1000 [00:08<00:11, 48.80it/s]

Sample: 43%|████▎ | 430/1000 [00:08<00:11, 49.08it/s]

Sample: 44%|████▎ | 435/1000 [00:08<00:11, 48.99it/s]

Sample: 44%|████▍ | 440/1000 [00:09<00:11, 49.08it/s]

Sample: 44%|████▍ | 445/1000 [00:09<00:11, 49.16it/s]

Sample: 45%|████▌ | 450/1000 [00:09<00:11, 49.28it/s]

Sample: 46%|████▌ | 455/1000 [00:09<00:11, 49.32it/s]

Sample: 46%|████▌ | 461/1000 [00:09<00:10, 49.52it/s]

Sample: 47%|████▋ | 466/1000 [00:09<00:10, 49.52it/s]

Sample: 47%|████▋ | 471/1000 [00:09<00:10, 49.63it/s]

Sample: 48%|████▊ | 476/1000 [00:09<00:10, 49.62it/s]

Sample: 48%|████▊ | 481/1000 [00:09<00:10, 49.64it/s]

Sample: 49%|████▊ | 486/1000 [00:09<00:10, 49.64it/s]

Sample: 49%|████▉ | 491/1000 [00:10<00:10, 49.62it/s]

Sample: 50%|████▉ | 497/1000 [00:10<00:10, 49.71it/s]

Sample: 50%|█████ | 502/1000 [00:10<00:10, 49.75it/s]

Sample: 51%|█████ | 508/1000 [00:10<00:09, 49.93it/s]

Sample: 51%|█████▏ | 513/1000 [00:10<00:09, 49.95it/s]

Sample: 52%|█████▏ | 518/1000 [00:10<00:09, 49.96it/s]

Sample: 52%|█████▏ | 523/1000 [00:10<00:09, 49.96it/s]

Sample: 53%|█████▎ | 529/1000 [00:10<00:09, 50.11it/s]

Sample: 54%|█████▎ | 535/1000 [00:10<00:09, 50.16it/s]

Sample: 54%|█████▍ | 541/1000 [00:11<00:09, 49.98it/s]

Sample: 55%|█████▍ | 546/1000 [00:11<00:09, 49.73it/s]

Sample: 55%|█████▌ | 551/1000 [00:11<00:09, 49.58it/s]

Sample: 56%|█████▌ | 556/1000 [00:11<00:08, 49.60it/s]

Sample: 56%|█████▌ | 561/1000 [00:11<00:08, 49.48it/s]

Sample: 57%|█████▋ | 566/1000 [00:11<00:08, 49.36it/s]

Sample: 57%|█████▋ | 571/1000 [00:11<00:08, 49.52it/s]

Sample: 58%|█████▊ | 576/1000 [00:11<00:08, 49.51it/s]

Sample: 58%|█████▊ | 581/1000 [00:11<00:08, 49.60it/s]

Sample: 59%|█████▊ | 586/1000 [00:11<00:08, 49.25it/s]

Sample: 59%|█████▉ | 591/1000 [00:12<00:08, 49.36it/s]

Sample: 60%|█████▉ | 596/1000 [00:12<00:08, 49.31it/s]

Sample: 60%|██████ | 601/1000 [00:12<00:08, 49.25it/s]

Sample: 61%|██████ | 607/1000 [00:12<00:07, 49.53it/s]

Sample: 61%|██████ | 612/1000 [00:12<00:07, 49.40it/s]

Sample: 62%|██████▏ | 617/1000 [00:12<00:07, 49.25it/s]

Sample: 62%|██████▏ | 622/1000 [00:12<00:07, 49.28it/s]

Sample: 63%|██████▎ | 627/1000 [00:12<00:07, 49.47it/s]

Sample: 63%|██████▎ | 632/1000 [00:12<00:07, 49.52it/s]

Sample: 64%|██████▎ | 637/1000 [00:13<00:07, 48.96it/s]

Sample: 64%|██████▍ | 642/1000 [00:13<00:07, 49.09it/s]

Sample: 65%|██████▍ | 647/1000 [00:13<00:07, 49.29it/s]

Sample: 65%|██████▌ | 653/1000 [00:13<00:06, 49.63it/s]

Sample: 66%|██████▌ | 658/1000 [00:13<00:06, 49.64it/s]

Sample: 66%|██████▋ | 663/1000 [00:13<00:06, 49.71it/s]

Sample: 67%|██████▋ | 669/1000 [00:13<00:06, 49.85it/s]

Sample: 67%|██████▋ | 674/1000 [00:13<00:06, 49.69it/s]

Sample: 68%|██████▊ | 679/1000 [00:13<00:06, 49.68it/s]

Sample: 68%|██████▊ | 684/1000 [00:13<00:06, 49.69it/s]

Sample: 69%|██████▉ | 689/1000 [00:14<00:06, 49.71it/s]

Sample: 69%|██████▉ | 694/1000 [00:14<00:06, 49.70it/s]

Sample: 70%|██████▉ | 699/1000 [00:14<00:06, 49.75it/s]

Sample: 70%|███████ | 704/1000 [00:14<00:05, 49.75it/s]

Sample: 71%|███████ | 710/1000 [00:14<00:05, 49.95it/s]

Sample: 72%|███████▏ | 715/1000 [00:14<00:05, 49.78it/s]

Sample: 72%|███████▏ | 720/1000 [00:14<00:05, 49.69it/s]

Sample: 73%|███████▎ | 726/1000 [00:14<00:05, 49.88it/s]

Sample: 73%|███████▎ | 731/1000 [00:14<00:05, 49.79it/s]

Sample: 74%|███████▎ | 736/1000 [00:14<00:05, 49.58it/s]

Sample: 74%|███████▍ | 742/1000 [00:15<00:05, 49.81it/s]

Sample: 75%|███████▍ | 747/1000 [00:15<00:05, 49.85it/s]

Sample: 75%|███████▌ | 753/1000 [00:15<00:04, 49.98it/s]

Sample: 76%|███████▌ | 758/1000 [00:15<00:04, 49.88it/s]

Sample: 76%|███████▋ | 763/1000 [00:15<00:04, 49.81it/s]

Sample: 77%|███████▋ | 768/1000 [00:15<00:04, 49.81it/s]

Sample: 77%|███████▋ | 773/1000 [00:15<00:04, 49.82it/s]

Sample: 78%|███████▊ | 778/1000 [00:15<00:04, 49.87it/s]

Sample: 78%|███████▊ | 783/1000 [00:15<00:04, 49.88it/s]

Sample: 79%|███████▉ | 788/1000 [00:16<00:04, 49.80it/s]

Sample: 79%|███████▉ | 793/1000 [00:16<00:04, 49.71it/s]

Sample: 80%|███████▉ | 798/1000 [00:16<00:04, 49.64it/s]

Sample: 80%|████████ | 803/1000 [00:16<00:03, 49.53it/s]

Sample: 81%|████████ | 808/1000 [00:16<00:03, 49.45it/s]

Sample: 81%|████████▏ | 813/1000 [00:16<00:03, 49.09it/s]

Sample: 82%|████████▏ | 818/1000 [00:16<00:03, 48.70it/s]

Sample: 82%|████████▏ | 823/1000 [00:16<00:03, 48.68it/s]

Sample: 83%|████████▎ | 828/1000 [00:16<00:03, 48.83it/s]

Sample: 83%|████████▎ | 833/1000 [00:16<00:03, 48.98it/s]

Sample: 84%|████████▍ | 838/1000 [00:17<00:03, 49.10it/s]

Sample: 84%|████████▍ | 843/1000 [00:17<00:03, 49.17it/s]

Sample: 85%|████████▍ | 848/1000 [00:17<00:03, 49.40it/s]

Sample: 85%|████████▌ | 853/1000 [00:17<00:02, 49.38it/s]

Sample: 86%|████████▌ | 858/1000 [00:17<00:02, 49.51it/s]

Sample: 86%|████████▋ | 863/1000 [00:17<00:02, 49.39it/s]

Sample: 87%|████████▋ | 868/1000 [00:17<00:02, 49.30it/s]

Sample: 87%|████████▋ | 873/1000 [00:17<00:02, 49.50it/s]

Sample: 88%|████████▊ | 878/1000 [00:17<00:02, 49.60it/s]

Sample: 88%|████████▊ | 883/1000 [00:17<00:02, 49.25it/s]

Sample: 89%|████████▉ | 888/1000 [00:18<00:02, 49.04it/s]

Sample: 89%|████████▉ | 893/1000 [00:18<00:02, 48.92it/s]

Sample: 90%|████████▉ | 898/1000 [00:18<00:02, 48.99it/s]

Sample: 90%|█████████ | 903/1000 [00:18<00:01, 49.12it/s]

Sample: 91%|█████████ | 908/1000 [00:18<00:01, 49.30it/s]

Sample: 91%|█████████▏| 913/1000 [00:18<00:01, 48.91it/s]

Sample: 92%|█████████▏| 918/1000 [00:18<00:01, 48.78it/s]

Sample: 92%|█████████▏| 923/1000 [00:18<00:01, 49.04it/s]

Sample: 93%|█████████▎| 928/1000 [00:18<00:01, 49.26it/s]

Sample: 93%|█████████▎| 933/1000 [00:18<00:01, 49.01it/s]

Sample: 94%|█████████▍| 938/1000 [00:19<00:01, 48.83it/s]

Sample: 94%|█████████▍| 943/1000 [00:19<00:01, 48.91it/s]

Sample: 95%|█████████▍| 948/1000 [00:19<00:01, 49.14it/s]

Sample: 95%|█████████▌| 953/1000 [00:19<00:00, 49.23it/s]

Sample: 96%|█████████▌| 958/1000 [00:19<00:00, 49.32it/s]

Sample: 96%|█████████▋| 963/1000 [00:19<00:00, 48.99it/s]

Sample: 97%|█████████▋| 968/1000 [00:19<00:00, 48.89it/s]

Sample: 97%|█████████▋| 973/1000 [00:19<00:00, 48.87it/s]

Sample: 98%|█████████▊| 978/1000 [00:19<00:00, 48.98it/s]

Sample: 98%|█████████▊| 983/1000 [00:20<00:00, 48.73it/s]

Sample: 99%|█████████▉| 988/1000 [00:20<00:00, 48.86it/s]

Sample: 99%|█████████▉| 993/1000 [00:20<00:00, 48.91it/s]

Sample: 100%|█████████▉| 998/1000 [00:20<00:00, 48.99it/s]

Sample: 100%|██████████| 1000/1000 [00:20<00:00, 49.11it/s]

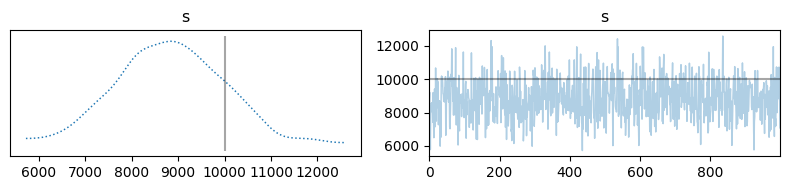

3.2 Analyze results#

Again we can inspect the results. Here we notice the posterior distribution matches the exact solution better than before.

# Plot credible intervals for the signal

samples_Ld['x'].plot_ci(exact=probinfo.exactSolution) # Issue here?

[<matplotlib.lines.Line2D at 0x7f39bc1e0400>,

<matplotlib.lines.Line2D at 0x7f39bc1e0610>,

<matplotlib.collections.PolyCollection at 0x7f39bc3b7f40>]

samples_Ld['d'].plot_trace(figsize=(8,2))

array([[<Axes: title={'center': 'd'}>, <Axes: title={'center': 'd'}>]],

dtype=object)

samples_Ld['s'].plot_trace(figsize=(8,2), exact=1/0.01**2)

array([[<Axes: title={'center': 's'}>, <Axes: title={'center': 's'}>]],

dtype=object)

We can also view some posterior samples.

samples_Ld['x'].plot()

Plotting 5 randomly selected samples

[<matplotlib.lines.Line2D at 0x7f39b4f09330>,

<matplotlib.lines.Line2D at 0x7f39b4f09420>,

<matplotlib.lines.Line2D at 0x7f39b4f09510>,

<matplotlib.lines.Line2D at 0x7f39b4f09600>,

<matplotlib.lines.Line2D at 0x7f39b4f096f0>]

4. Connection to BayesianProblem class #

Finally, let us connect back the non-expert interface through the BayesianProblem class.

Instead of explicitly defining the Gibbs sampler, we can also (for supported cases) simply provide the distributions for each of the parameters and the observed data to the BayesianProblem class. The class will then automatically construct a Gibbs sampler for the problem. This is done as follows:

# Provide distributions for parameters and set data

BP = BayesianProblem(y,x,d,s).set_data(y=y_data)

# DO UQ and compare with exact solution and noise precision

samples_BP = BP.UQ(exact={"x":probinfo.exactSolution, "s":1/0.01**2}, experimental=True)

Computing 1000 samples

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

!!! Automatic sampler selection is a work-in-progress. !!!

!!! Always validate the computed results. !!!

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

!!! Using samplers from cuqi.experimental.mcmc !!!

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Using cuqi.experimental.mcmc HybridGibbs sampler

burn-in: 20%

Automatically determined sampling strategy:

x: UGLA (mcmc.experimental)

d: ConjugateApprox (mcmc.experimental)

s: Conjugate (mcmc.experimental)

Warmup: 0%| | 0/200 [00:00<?, ?it/s]

Warmup: 2%|▎ | 5/200 [00:00<00:03, 49.38it/s]

Warmup: 5%|▌ | 10/200 [00:00<00:03, 49.44it/s]

Warmup: 8%|▊ | 15/200 [00:00<00:03, 49.55it/s]

Warmup: 10%|█ | 21/200 [00:00<00:03, 49.94it/s]

Warmup: 14%|█▎ | 27/200 [00:00<00:03, 50.08it/s]

Warmup: 16%|█▋ | 33/200 [00:00<00:03, 49.63it/s]

Warmup: 19%|█▉ | 38/200 [00:00<00:03, 49.27it/s]

Warmup: 22%|██▏ | 43/200 [00:00<00:03, 49.45it/s]

Warmup: 24%|██▍ | 48/200 [00:00<00:03, 49.42it/s]

Warmup: 26%|██▋ | 53/200 [00:01<00:02, 49.52it/s]

Warmup: 29%|██▉ | 58/200 [00:01<00:02, 49.58it/s]

Warmup: 32%|███▏ | 63/200 [00:01<00:02, 49.63it/s]

Warmup: 34%|███▍ | 68/200 [00:01<00:02, 49.73it/s]

Warmup: 37%|███▋ | 74/200 [00:01<00:02, 49.94it/s]

Warmup: 40%|███▉ | 79/200 [00:01<00:02, 49.33it/s]

Warmup: 42%|████▏ | 84/200 [00:01<00:02, 49.44it/s]

Warmup: 45%|████▌ | 90/200 [00:01<00:02, 49.73it/s]

Warmup: 48%|████▊ | 95/200 [00:01<00:02, 49.60it/s]

Warmup: 50%|█████ | 100/200 [00:02<00:02, 49.21it/s]

Warmup: 52%|█████▎ | 105/200 [00:02<00:01, 49.26it/s]

Warmup: 55%|█████▌ | 110/200 [00:02<00:01, 49.41it/s]

Warmup: 57%|█████▊ | 115/200 [00:02<00:01, 49.50it/s]

Warmup: 60%|██████ | 120/200 [00:02<00:01, 49.60it/s]

Warmup: 62%|██████▎ | 125/200 [00:02<00:01, 49.60it/s]

Warmup: 66%|██████▌ | 131/200 [00:02<00:01, 49.73it/s]

Warmup: 68%|██████▊ | 136/200 [00:02<00:01, 49.71it/s]

Warmup: 70%|███████ | 141/200 [00:02<00:01, 49.55it/s]

Warmup: 73%|███████▎ | 146/200 [00:02<00:01, 49.57it/s]

Warmup: 76%|███████▌ | 152/200 [00:03<00:00, 49.96it/s]

Warmup: 78%|███████▊ | 157/200 [00:03<00:00, 49.88it/s]

Warmup: 81%|████████ | 162/200 [00:03<00:00, 49.74it/s]

Warmup: 84%|████████▎ | 167/200 [00:03<00:00, 49.81it/s]

Warmup: 86%|████████▌ | 172/200 [00:03<00:00, 49.84it/s]

Warmup: 88%|████████▊ | 177/200 [00:03<00:00, 49.81it/s]

Warmup: 91%|█████████ | 182/200 [00:03<00:00, 49.60it/s]

Warmup: 94%|█████████▎| 187/200 [00:03<00:00, 49.64it/s]

Warmup: 96%|█████████▌| 192/200 [00:03<00:00, 49.70it/s]

Warmup: 98%|█████████▊| 197/200 [00:03<00:00, 49.63it/s]

Warmup: 100%|██████████| 200/200 [00:04<00:00, 49.59it/s]

Sample: 0%| | 0/1000 [00:00<?, ?it/s]

Sample: 1%| | 6/1000 [00:00<00:19, 50.75it/s]

Sample: 1%| | 12/1000 [00:00<00:19, 50.02it/s]

Sample: 2%|▏ | 18/1000 [00:00<00:19, 50.24it/s]

Sample: 2%|▏ | 24/1000 [00:00<00:19, 50.27it/s]

Sample: 3%|▎ | 30/1000 [00:00<00:19, 50.28it/s]

Sample: 4%|▎ | 36/1000 [00:00<00:19, 50.15it/s]

Sample: 4%|▍ | 42/1000 [00:00<00:19, 50.16it/s]

Sample: 5%|▍ | 48/1000 [00:00<00:18, 50.11it/s]

Sample: 5%|▌ | 54/1000 [00:01<00:18, 50.09it/s]

Sample: 6%|▌ | 60/1000 [00:01<00:18, 49.71it/s]

Sample: 6%|▋ | 65/1000 [00:01<00:18, 49.51it/s]

Sample: 7%|▋ | 70/1000 [00:01<00:18, 49.48it/s]

Sample: 8%|▊ | 75/1000 [00:01<00:18, 49.47it/s]

Sample: 8%|▊ | 80/1000 [00:01<00:18, 49.41it/s]

Sample: 9%|▊ | 86/1000 [00:01<00:18, 49.78it/s]

Sample: 9%|▉ | 91/1000 [00:01<00:18, 49.83it/s]

Sample: 10%|▉ | 96/1000 [00:01<00:18, 49.87it/s]

Sample: 10%|█ | 102/1000 [00:02<00:17, 49.98it/s]

Sample: 11%|█ | 107/1000 [00:02<00:17, 49.94it/s]

Sample: 11%|█ | 112/1000 [00:02<00:17, 49.72it/s]

Sample: 12%|█▏ | 117/1000 [00:02<00:17, 49.35it/s]

Sample: 12%|█▏ | 122/1000 [00:02<00:17, 49.24it/s]

Sample: 13%|█▎ | 128/1000 [00:02<00:17, 49.63it/s]

Sample: 13%|█▎ | 134/1000 [00:02<00:17, 49.92it/s]

Sample: 14%|█▍ | 139/1000 [00:02<00:17, 49.78it/s]

Sample: 14%|█▍ | 145/1000 [00:02<00:17, 50.00it/s]

Sample: 15%|█▌ | 151/1000 [00:03<00:16, 50.18it/s]

Sample: 16%|█▌ | 157/1000 [00:03<00:16, 50.16it/s]

Sample: 16%|█▋ | 163/1000 [00:03<00:16, 49.75it/s]

Sample: 17%|█▋ | 168/1000 [00:03<00:16, 49.48it/s]

Sample: 17%|█▋ | 174/1000 [00:03<00:16, 49.72it/s]

Sample: 18%|█▊ | 179/1000 [00:03<00:16, 49.61it/s]

Sample: 18%|█▊ | 184/1000 [00:03<00:16, 49.68it/s]

Sample: 19%|█▉ | 190/1000 [00:03<00:16, 49.87it/s]

Sample: 20%|█▉ | 196/1000 [00:03<00:16, 49.98it/s]

Sample: 20%|██ | 201/1000 [00:04<00:16, 49.68it/s]

Sample: 21%|██ | 206/1000 [00:04<00:15, 49.77it/s]

Sample: 21%|██ | 212/1000 [00:04<00:15, 49.97it/s]

Sample: 22%|██▏ | 217/1000 [00:04<00:15, 49.96it/s]

Sample: 22%|██▏ | 222/1000 [00:04<00:15, 49.91it/s]

Sample: 23%|██▎ | 227/1000 [00:04<00:15, 49.78it/s]

Sample: 23%|██▎ | 232/1000 [00:04<00:15, 49.83it/s]

Sample: 24%|██▎ | 237/1000 [00:04<00:15, 49.62it/s]

Sample: 24%|██▍ | 242/1000 [00:04<00:15, 49.50it/s]

Sample: 25%|██▍ | 247/1000 [00:04<00:15, 49.30it/s]

Sample: 25%|██▌ | 252/1000 [00:05<00:15, 49.21it/s]

Sample: 26%|██▌ | 257/1000 [00:05<00:15, 49.22it/s]

Sample: 26%|██▌ | 262/1000 [00:05<00:14, 49.24it/s]

Sample: 27%|██▋ | 268/1000 [00:05<00:14, 49.58it/s]

Sample: 27%|██▋ | 273/1000 [00:05<00:14, 49.69it/s]

Sample: 28%|██▊ | 278/1000 [00:05<00:14, 49.49it/s]

Sample: 28%|██▊ | 284/1000 [00:05<00:14, 49.78it/s]

Sample: 29%|██▉ | 289/1000 [00:05<00:14, 49.51it/s]

Sample: 29%|██▉ | 294/1000 [00:05<00:14, 49.40it/s]

Sample: 30%|██▉ | 299/1000 [00:06<00:14, 49.40it/s]

Sample: 30%|███ | 304/1000 [00:06<00:14, 49.20it/s]

Sample: 31%|███ | 309/1000 [00:06<00:14, 49.31it/s]

Sample: 31%|███▏ | 314/1000 [00:06<00:13, 49.41it/s]

Sample: 32%|███▏ | 319/1000 [00:06<00:14, 48.41it/s]

Sample: 32%|███▏ | 324/1000 [00:06<00:14, 46.57it/s]

Sample: 33%|███▎ | 329/1000 [00:06<00:14, 47.09it/s]

Sample: 33%|███▎ | 334/1000 [00:06<00:13, 47.61it/s]

Sample: 34%|███▍ | 339/1000 [00:06<00:13, 47.89it/s]

Sample: 34%|███▍ | 344/1000 [00:06<00:13, 48.09it/s]

Sample: 35%|███▍ | 349/1000 [00:07<00:13, 48.12it/s]

Sample: 35%|███▌ | 354/1000 [00:07<00:13, 48.56it/s]

Sample: 36%|███▌ | 360/1000 [00:07<00:13, 49.15it/s]

Sample: 37%|███▋ | 366/1000 [00:07<00:12, 49.51it/s]

Sample: 37%|███▋ | 372/1000 [00:07<00:12, 50.04it/s]

Sample: 38%|███▊ | 377/1000 [00:07<00:12, 49.99it/s]

Sample: 38%|███▊ | 383/1000 [00:07<00:12, 50.13it/s]

Sample: 39%|███▉ | 389/1000 [00:07<00:12, 50.28it/s]

Sample: 40%|███▉ | 395/1000 [00:07<00:12, 50.28it/s]

Sample: 40%|████ | 401/1000 [00:08<00:11, 50.11it/s]

Sample: 41%|████ | 407/1000 [00:08<00:11, 50.08it/s]

Sample: 41%|████▏ | 413/1000 [00:08<00:11, 50.27it/s]

Sample: 42%|████▏ | 419/1000 [00:08<00:11, 50.37it/s]

Sample: 42%|████▎ | 425/1000 [00:08<00:11, 50.47it/s]

Sample: 43%|████▎ | 431/1000 [00:08<00:11, 50.59it/s]

Sample: 44%|████▎ | 437/1000 [00:08<00:11, 50.51it/s]

Sample: 44%|████▍ | 443/1000 [00:08<00:10, 50.67it/s]

Sample: 45%|████▍ | 449/1000 [00:09<00:10, 50.53it/s]

Sample: 46%|████▌ | 455/1000 [00:09<00:13, 39.69it/s]

Sample: 46%|████▌ | 460/1000 [00:09<00:12, 41.54it/s]

Sample: 46%|████▋ | 465/1000 [00:09<00:12, 43.03it/s]

Sample: 47%|████▋ | 470/1000 [00:09<00:11, 44.39it/s]

Sample: 48%|████▊ | 476/1000 [00:09<00:11, 46.18it/s]

Sample: 48%|████▊ | 481/1000 [00:09<00:11, 47.14it/s]

Sample: 49%|████▊ | 486/1000 [00:09<00:10, 47.67it/s]

Sample: 49%|████▉ | 491/1000 [00:10<00:10, 48.04it/s]

Sample: 50%|████▉ | 496/1000 [00:10<00:10, 48.30it/s]

Sample: 50%|█████ | 502/1000 [00:10<00:10, 48.94it/s]

Sample: 51%|█████ | 507/1000 [00:10<00:10, 49.04it/s]

Sample: 51%|█████ | 512/1000 [00:10<00:09, 49.29it/s]

Sample: 52%|█████▏ | 517/1000 [00:10<00:09, 49.15it/s]

Sample: 52%|█████▏ | 522/1000 [00:10<00:09, 49.38it/s]

Sample: 53%|█████▎ | 528/1000 [00:10<00:09, 49.71it/s]

Sample: 53%|█████▎ | 534/1000 [00:10<00:09, 49.83it/s]

Sample: 54%|█████▍ | 539/1000 [00:10<00:09, 49.82it/s]

Sample: 54%|█████▍ | 544/1000 [00:11<00:09, 49.71it/s]

Sample: 55%|█████▍ | 549/1000 [00:11<00:09, 49.48it/s]

Sample: 55%|█████▌ | 554/1000 [00:11<00:09, 49.50it/s]

Sample: 56%|█████▌ | 559/1000 [00:11<00:08, 49.57it/s]

Sample: 56%|█████▋ | 564/1000 [00:11<00:08, 49.57it/s]

Sample: 57%|█████▋ | 569/1000 [00:11<00:08, 49.33it/s]

Sample: 57%|█████▋ | 574/1000 [00:11<00:08, 49.34it/s]

Sample: 58%|█████▊ | 579/1000 [00:11<00:08, 49.46it/s]

Sample: 58%|█████▊ | 585/1000 [00:11<00:08, 49.76it/s]

Sample: 59%|█████▉ | 590/1000 [00:11<00:08, 49.76it/s]

Sample: 60%|█████▉ | 595/1000 [00:12<00:08, 49.57it/s]

Sample: 60%|██████ | 600/1000 [00:12<00:08, 49.68it/s]

Sample: 61%|██████ | 606/1000 [00:12<00:07, 49.75it/s]

Sample: 61%|██████ | 611/1000 [00:12<00:07, 49.45it/s]

Sample: 62%|██████▏ | 617/1000 [00:12<00:07, 49.83it/s]

Sample: 62%|██████▏ | 622/1000 [00:12<00:07, 49.81it/s]

Sample: 63%|██████▎ | 628/1000 [00:12<00:07, 49.95it/s]

Sample: 63%|██████▎ | 634/1000 [00:12<00:07, 50.05it/s]

Sample: 64%|██████▍ | 640/1000 [00:13<00:07, 49.77it/s]

Sample: 64%|██████▍ | 645/1000 [00:13<00:07, 49.49it/s]

Sample: 65%|██████▌ | 651/1000 [00:13<00:07, 49.80it/s]

Sample: 66%|██████▌ | 657/1000 [00:13<00:06, 49.94it/s]

Sample: 66%|██████▋ | 663/1000 [00:13<00:06, 50.01it/s]

Sample: 67%|██████▋ | 668/1000 [00:13<00:06, 49.78it/s]

Sample: 67%|██████▋ | 673/1000 [00:13<00:06, 49.79it/s]

Sample: 68%|██████▊ | 679/1000 [00:13<00:06, 49.97it/s]

Sample: 68%|██████▊ | 684/1000 [00:13<00:06, 49.91it/s]

Sample: 69%|██████▉ | 689/1000 [00:13<00:06, 49.81it/s]

Sample: 69%|██████▉ | 694/1000 [00:14<00:06, 49.62it/s]

Sample: 70%|██████▉ | 699/1000 [00:14<00:06, 49.18it/s]

Sample: 70%|███████ | 704/1000 [00:14<00:05, 49.37it/s]

Sample: 71%|███████ | 709/1000 [00:14<00:05, 49.28it/s]

Sample: 71%|███████▏ | 714/1000 [00:14<00:05, 49.13it/s]

Sample: 72%|███████▏ | 719/1000 [00:14<00:05, 49.35it/s]

Sample: 72%|███████▏ | 724/1000 [00:14<00:05, 49.46it/s]

Sample: 73%|███████▎ | 729/1000 [00:14<00:05, 49.31it/s]

Sample: 74%|███████▎ | 735/1000 [00:14<00:05, 49.53it/s]

Sample: 74%|███████▍ | 741/1000 [00:15<00:05, 49.75it/s]

Sample: 75%|███████▍ | 746/1000 [00:15<00:05, 49.68it/s]

Sample: 75%|███████▌ | 752/1000 [00:15<00:04, 49.78it/s]

Sample: 76%|███████▌ | 758/1000 [00:15<00:04, 50.05it/s]

Sample: 76%|███████▋ | 764/1000 [00:15<00:04, 50.06it/s]

Sample: 77%|███████▋ | 770/1000 [00:15<00:04, 50.00it/s]

Sample: 78%|███████▊ | 775/1000 [00:15<00:04, 49.96it/s]

Sample: 78%|███████▊ | 780/1000 [00:15<00:04, 49.83it/s]

Sample: 78%|███████▊ | 785/1000 [00:15<00:04, 49.46it/s]

Sample: 79%|███████▉ | 790/1000 [00:16<00:04, 49.39it/s]

Sample: 80%|███████▉ | 795/1000 [00:16<00:04, 49.52it/s]

Sample: 80%|████████ | 800/1000 [00:16<00:04, 49.49it/s]

Sample: 81%|████████ | 806/1000 [00:16<00:03, 49.71it/s]

Sample: 81%|████████ | 811/1000 [00:16<00:03, 49.68it/s]

Sample: 82%|████████▏ | 816/1000 [00:16<00:03, 49.73it/s]

Sample: 82%|████████▏ | 822/1000 [00:16<00:03, 49.89it/s]

Sample: 83%|████████▎ | 827/1000 [00:16<00:03, 49.72it/s]

Sample: 83%|████████▎ | 832/1000 [00:16<00:03, 49.67it/s]

Sample: 84%|████████▍ | 838/1000 [00:16<00:03, 49.86it/s]

Sample: 84%|████████▍ | 844/1000 [00:17<00:03, 50.08it/s]

Sample: 85%|████████▌ | 850/1000 [00:17<00:02, 50.04it/s]

Sample: 86%|████████▌ | 856/1000 [00:17<00:02, 50.11it/s]

Sample: 86%|████████▌ | 862/1000 [00:17<00:02, 49.80it/s]

Sample: 87%|████████▋ | 867/1000 [00:17<00:02, 49.57it/s]

Sample: 87%|████████▋ | 872/1000 [00:17<00:02, 49.57it/s]

Sample: 88%|████████▊ | 878/1000 [00:17<00:02, 49.92it/s]

Sample: 88%|████████▊ | 884/1000 [00:17<00:02, 50.02it/s]

Sample: 89%|████████▉ | 889/1000 [00:18<00:02, 49.89it/s]

Sample: 90%|████████▉ | 895/1000 [00:18<00:02, 50.00it/s]

Sample: 90%|█████████ | 900/1000 [00:18<00:02, 49.92it/s]

Sample: 91%|█████████ | 906/1000 [00:18<00:01, 50.07it/s]

Sample: 91%|█████████ | 912/1000 [00:18<00:01, 50.03it/s]

Sample: 92%|█████████▏| 918/1000 [00:18<00:01, 49.99it/s]

Sample: 92%|█████████▏| 923/1000 [00:18<00:01, 49.57it/s]

Sample: 93%|█████████▎| 928/1000 [00:18<00:01, 49.59it/s]

Sample: 93%|█████████▎| 934/1000 [00:18<00:01, 49.77it/s]

Sample: 94%|█████████▍| 939/1000 [00:19<00:01, 49.82it/s]

Sample: 94%|█████████▍| 944/1000 [00:19<00:01, 49.77it/s]

Sample: 95%|█████████▍| 949/1000 [00:19<00:01, 49.41it/s]

Sample: 95%|█████████▌| 954/1000 [00:19<00:00, 49.57it/s]

Sample: 96%|█████████▌| 960/1000 [00:19<00:00, 49.76it/s]

Sample: 96%|█████████▋| 965/1000 [00:19<00:00, 49.68it/s]

Sample: 97%|█████████▋| 971/1000 [00:19<00:00, 49.96it/s]

Sample: 98%|█████████▊| 977/1000 [00:19<00:00, 50.09it/s]

Sample: 98%|█████████▊| 983/1000 [00:19<00:00, 49.96it/s]

Sample: 99%|█████████▉| 988/1000 [00:19<00:00, 49.90it/s]

Sample: 99%|█████████▉| 994/1000 [00:20<00:00, 49.83it/s]

Sample: 100%|█████████▉| 999/1000 [00:20<00:00, 49.82it/s]

Sample: 100%|██████████| 1000/1000 [00:20<00:00, 49.41it/s]

Elapsed time: 24.28712224960327

Plotting results

★ Try yourself (optional):#

Try building your own Bayesian problem and sampling from it using the BayesianProblem class. You can use the code from the previous sections as a starting point. If the automatic sampler selection fails, you can also try to defining the Gibbs sampler manually.

You can also try the same Bayesian problem but for 2D deconvolution

# Your code here

★ Exercise #

Numerical verification of the conjugacy of a Gaussian likelihood a Gaussian prior. Consider the following simple BIP:

# Prior

cov1 = np.array([[1, 0.5], [0.5, 1]])

x1 = Gaussian(np.zeros(2), cov1)

I = np.eye(2)

cov_obs = 0.1*I

# Forward model and likelihood

A = LinearModel(forward=I)

x2 = Gaussian(A@x1, cov_obs)

# Set the BIP

BP2 = BayesianProblem(x1, x2)

data = np.array([0.1, 0.2])

BP2.set_data(x2=data)

# Solve the BIP

samples_post = BP2.sample_posterior(10000, experimental=True)

samples_post.plot_pair()

Create a CUQIpy Gaussian distribution x3 that is equivalent to the posterior distribution of this BIP. Hint: recall conjugacy of Gaussian likelihood and Gaussian prior. Sample x3 and use the plot_pair method to visualize the samples. Compare the results with the samples from the BayesianProblem class.

# Your code here