Multiple input model: bathtub demo#

This demo uses CUQIpy to model the temperature and volume of the water in a bathtub. Given measurements of the temperature and volume of the water in the bathtub, we want to infer the temperature and volume of the hot water and of the cold water that were used to fill it.

Import libraries#

import cuqi

import numpy as np

Define the forward map#

h_v is the volume of hot water, h_t is the temperature of hot water, c_v is the volume of cold water, and c_t is the temperature of cold water.

def forward_map(h_v, h_t, c_v, c_t):
    # volume
    volume = h_v + c_v
    # temperature
    temp = (h_v * h_t + c_v * c_t) / (h_v + c_v)
    return np.array([volume, temp]).reshape(2,)
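As a quick sanity check, the forward map can be evaluated with plain NumPy, independently of CUQIpy. The sketch below repeats the definition so that it is self-contained, and uses the same input values as the full model evaluation later in this demo. The name `mixed` is only illustrative.

```python
import numpy as np

def forward_map(h_v, h_t, c_v, c_t):
    # total volume is the sum of the hot and cold volumes
    volume = h_v + c_v
    # mixed temperature is the volume-weighted average temperature
    temp = (h_v * h_t + c_v * c_t) / (h_v + c_v)
    return np.array([volume, temp]).reshape(2,)

# 50 units of hot water at 60 degrees mixed with 30 units of cold water at 10 degrees
mixed = forward_map(h_v=50, h_t=60, c_v=30, c_t=10)
print(mixed)  # volume first, then temperature
```

Note that the output is ordered as volume first and temperature second.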

Define gradient functions with respect to the unknown parameters#

# Define the gradient with respect to h_v
def gradient_h_v(direction, h_v, h_t, c_v, c_t):
    return (
        direction[0]
        + (h_t / (h_v + c_v) - (h_v * h_t + c_v * c_t) / (h_v + c_v) ** 2)
        * direction[1]
    )

# Define the gradient with respect to h_t
def gradient_h_t(direction, h_v, h_t, c_v, c_t):
    return (h_v / (h_v + c_v)) * direction[1]

# Define the gradient with respect to c_v
def gradient_c_v(direction, h_v, h_t, c_v, c_t):
    return (
        direction[0]
        + (c_t / (h_v + c_v) - (h_v * h_t + c_v * c_t) / (h_v + c_v) ** 2)
        * direction[1]
    )

# Define the gradient with respect to c_t
def gradient_c_t(direction, h_v, h_t, c_v, c_t):
    return (c_v / (h_v + c_v)) * direction[1]
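Each gradient function returns the inner product of `direction` with the partial derivative of the forward map with respect to one input. One way to check such hand-derived expressions is a central finite-difference comparison. The sketch below is a NumPy-only check, not part of the original demo; the helper `fd_gradient`, the test point `params`, and the test `direction` are illustrative choices.

```python
import numpy as np

def forward_map(h_v, h_t, c_v, c_t):
    volume = h_v + c_v
    temp = (h_v * h_t + c_v * c_t) / (h_v + c_v)
    return np.array([volume, temp]).reshape(2,)

def gradient_h_v(direction, h_v, h_t, c_v, c_t):
    return (direction[0]
            + (h_t / (h_v + c_v) - (h_v * h_t + c_v * c_t) / (h_v + c_v) ** 2)
            * direction[1])

def gradient_h_t(direction, h_v, h_t, c_v, c_t):
    return (h_v / (h_v + c_v)) * direction[1]

def gradient_c_v(direction, h_v, h_t, c_v, c_t):
    return (direction[0]
            + (c_t / (h_v + c_v) - (h_v * h_t + c_v * c_t) / (h_v + c_v) ** 2)
            * direction[1])

def gradient_c_t(direction, h_v, h_t, c_v, c_t):
    return (c_v / (h_v + c_v)) * direction[1]

def fd_gradient(direction, params, index, eps=1e-6):
    """Central finite-difference estimate of direction . dF/dparams[index]."""
    plus, minus = list(params), list(params)
    plus[index] += eps
    minus[index] -= eps
    dF = (forward_map(*plus) - forward_map(*minus)) / (2 * eps)
    return float(np.dot(direction, dF))

params = (50.0, 60.0, 30.0, 10.0)   # h_v, h_t, c_v, c_t
direction = np.array([1.0, 2.0])    # arbitrary direction in the output space

analytic = [g(direction, *params)
            for g in (gradient_h_v, gradient_h_t, gradient_c_v, gradient_c_t)]
numeric = [fd_gradient(direction, params, i) for i in range(4)]

for name, a, n in zip(["h_v", "h_t", "c_v", "c_t"], analytic, numeric):
    print(f"{name}: analytic={a:.6f}, finite difference={n:.6f}")
```

If the analytic and finite-difference values agree to several digits, the gradient expressions are consistent with the forward map at that point.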

Define domain geometry and range geometry#

domain_geometry = (

cuqi.geometry.Discrete(['h_v']),

cuqi.geometry.Discrete(['h_t']),

cuqi.geometry.Discrete(['c_v']),

cuqi.geometry.Discrete(['c_t'])

)

# The forward map returns [volume, temperature], so the labels follow that order
range_geometry = cuqi.geometry.Discrete(['volume','temperature'])

Define the forward model object#

model = cuqi.model.Model(

forward=forward_map,

gradient=(gradient_h_v, gradient_h_t, gradient_c_v, gradient_c_t),

domain_geometry=domain_geometry,

range_geometry=range_geometry

)

Experiment with partial evaluation of the model#

print("\nmodel()\n", model())

print("\nmodel(h_v = 50)\n", model(h_v=50))

print("\nmodel(h_v = 50, h_t = 60)\n", model(h_v=50, h_t=60))

print("\nmodel(h_v = 50, h_t = 60, c_v = 30)\n", model(h_v=50, h_t=60, c_v=30))

print(

"\nmodel(h_v = 50, h_t = 60, c_v = 30, c_t = 10)\n",

model(h_v=50, h_t=60, c_v=30, c_t=10),

)

model()

CUQI Model: _ProductGeometry(

Discrete[1]

Discrete[1]

Discrete[1]

Discrete[1]

) -> Discrete[2].

Forward parameters: ['h_v', 'h_t', 'c_v', 'c_t'].

model(h_v = 50)

CUQI Model: _ProductGeometry(

Discrete[1]

Discrete[1]

Discrete[1]

) -> Discrete[2].

Forward parameters: ['h_t', 'c_v', 'c_t'].

model(h_v = 50, h_t = 60)

CUQI Model: _ProductGeometry(

Discrete[1]

Discrete[1]

) -> Discrete[2].

Forward parameters: ['c_v', 'c_t'].

model(h_v = 50, h_t = 60, c_v = 30)

CUQI Model: Discrete[1] -> Discrete[2].

Forward parameters: ['c_t'].

model(h_v = 50, h_t = 60, c_v = 30, c_t = 10)

[80. 41.25]
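The partial evaluation shown above behaves much like fixing some arguments of an ordinary Python function: the result is a new callable that still expects the remaining inputs. As a rough analogy only (this is not a description of how CUQIpy implements it), the same idea can be sketched with `functools.partial`. The names `fixed_hot` and `result` are illustrative.

```python
from functools import partial

import numpy as np

def forward_map(h_v, h_t, c_v, c_t):
    volume = h_v + c_v
    temp = (h_v * h_t + c_v * c_t) / (h_v + c_v)
    return np.array([volume, temp]).reshape(2,)

# Fix the hot-water inputs; the result still expects c_v and c_t
fixed_hot = partial(forward_map, h_v=50, h_t=60)

# Supplying the remaining inputs gives the full evaluation
result = fixed_hot(c_v=30, c_t=10)
print(result)
```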

Define prior distributions for the unknown parameters#

h_v_dist = cuqi.distribution.Uniform(0, 60, geometry=domain_geometry[0])

h_t_dist = cuqi.distribution.Uniform(40, 70, geometry=domain_geometry[1])

c_v_dist = cuqi.distribution.Uniform(0, 60, geometry=domain_geometry[2])

c_t_dist = cuqi.distribution.TruncatedNormal(

10, 2**2, 7, 15, geometry=domain_geometry[3]

)

Define a data distribution#

data_dist = cuqi.distribution.Gaussian(

mean=model(h_v_dist, h_t_dist, c_v_dist, c_t_dist),

cov=np.array([[1**2, 0], [0, 0.5**2]])

)
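The data distribution above says that the measurement is the forward-map output plus zero-mean Gaussian noise with standard deviation 1 on the volume and 0.5 on the temperature. To make that concrete, the sketch below evaluates the corresponding Gaussian log-density with NumPy alone, using the measurement from later in this demo (volume 60, temperature 38). The function `gaussian_loglike` and the two test points are illustrative and not part of CUQIpy.

```python
import numpy as np

def forward_map(h_v, h_t, c_v, c_t):
    volume = h_v + c_v
    temp = (h_v * h_t + c_v * c_t) / (h_v + c_v)
    return np.array([volume, temp]).reshape(2,)

cov = np.array([[1**2, 0], [0, 0.5**2]])  # same covariance as in the demo
data = np.array([60, 38])                 # measured [volume, temperature]

def gaussian_loglike(h_v, h_t, c_v, c_t):
    """Log-density of the data under N(forward_map(...), cov)."""
    residual = data - forward_map(h_v, h_t, c_v, c_t)
    _, logdet = np.linalg.slogdet(cov)
    quad = residual @ np.linalg.solve(cov, residual)
    return -0.5 * (data.size * np.log(2 * np.pi) + logdet + quad)

# Inputs that reproduce the data exactly: 30 + 30 = 60, (30*66 + 30*10)/60 = 38
exact = gaussian_loglike(30, 66, 30, 10)
# Same volumes, but cooler hot water gives a mixed temperature of 35
off = gaussian_loglike(30, 60, 30, 10)
print(exact, off)
```

Parameter values that reproduce the measurement give the largest log-likelihood. A 3-degree mismatch in temperature lowers it by 0.5 * 3**2 / 0.5**2 = 18.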

Define a joint distribution of prior and data distributions#

joint_dist = cuqi.distribution.JointDistribution(

data_dist,

h_v_dist,

h_t_dist,

c_v_dist,

c_t_dist

)

Define the posterior distribution by setting the observed data#

# Assume the measured volume is 60 gallons and the measured temperature is
# 38 degrees Celsius

posterior = joint_dist(data_dist=np.array([60, 38]))

Experiment with conditioning the posterior distribution#

print("posterior", posterior)

print("\nposterior(h_v_dist = 50)\n", posterior(h_v_dist=50))

print("\nposterior(h_v_dist = 50, h_t_dist = 60)\n", posterior(h_v_dist=50, h_t_dist=60))

print(

"\nposterior(h_v_dist = 50, h_t_dist = 60, c_v_dist = 30)\n",

posterior(h_v_dist=50, h_t_dist=60, c_v_dist=30),

)

posterior JointDistribution(

Equation:

p(h_v_dist,h_t_dist,c_v_dist,c_t_dist|data_dist) ∝ L(h_v_dist,h_t_dist,c_t_dist,c_v_dist|data_dist)p(h_v_dist)p(h_t_dist)p(c_v_dist)p(c_t_dist)

Densities:

data_dist ~ CUQI Gaussian Likelihood function. Parameters ['h_v_dist', 'h_t_dist', 'c_v_dist', 'c_t_dist'].

h_v_dist ~ CUQI Uniform.

h_t_dist ~ CUQI Uniform.

c_v_dist ~ CUQI Uniform.

c_t_dist ~ CUQI TruncatedNormal.

)

posterior(h_v_dist = 50)

JointDistribution(

Equation:

p(h_t_dist,c_v_dist,c_t_dist|data_dist,h_v_dist) ∝ L(h_t_dist,c_t_dist,c_v_dist|data_dist)p(h_t_dist)p(c_v_dist)p(c_t_dist)

Densities:

data_dist ~ CUQI Gaussian Likelihood function. Parameters ['h_t_dist', 'c_v_dist', 'c_t_dist'].

h_v_dist ~ EvaluatedDensity(-4.0943445622221)

h_t_dist ~ CUQI Uniform.

c_v_dist ~ CUQI Uniform.

c_t_dist ~ CUQI TruncatedNormal.

)

posterior(h_v_dist = 50, h_t_dist = 60)

JointDistribution(

Equation:

p(c_v_dist,c_t_dist|data_dist,h_v_dist,h_t_dist) ∝ L(c_t_dist,c_v_dist|data_dist)p(c_v_dist)p(c_t_dist)

Densities:

data_dist ~ CUQI Gaussian Likelihood function. Parameters ['c_v_dist', 'c_t_dist'].

h_v_dist ~ EvaluatedDensity(-4.0943445622221)

h_t_dist ~ EvaluatedDensity(-3.4011973816621555)

c_v_dist ~ CUQI Uniform.

c_t_dist ~ CUQI TruncatedNormal.

)

posterior(h_v_dist = 50, h_t_dist = 60, c_v_dist = 30)

Posterior(

Equation:

p(c_t_dist|data_dist) ∝ L(c_t_dist|data_dist)p(c_t_dist)

Densities:

data_dist ~ CUQI Gaussian Likelihood function. Parameters ['c_t_dist'].

c_t_dist ~ CUQI TruncatedNormal.

)

Sample from the joint (posterior) distribution#

First, define the sampling strategy for Gibbs sampling: one sampler per unknown parameter.

sampling_strategy = {

"h_v_dist": cuqi.sampler.MH(

scale=0.2, initial_point=np.array([30])),

"h_t_dist": cuqi.sampler.MALA(

scale=0.6, initial_point=np.array([50])),

"c_v_dist": cuqi.sampler.MALA(

scale=0.6, initial_point=np.array([30])),

"c_t_dist": cuqi.sampler.MALA(

scale=0.6, initial_point=np.array([10])),

}

Then create the sampler and sample the posterior distribution.

hybridGibbs = cuqi.sampler.HybridGibbs(

posterior,

sampling_strategy=sampling_strategy)

hybridGibbs.warmup(100)

hybridGibbs.sample(2000)

samples = hybridGibbs.get_samples()

Warmup: 100%|██████████| 100/100 [00:00<00:00, 200.15it/s]

Sample: 100%|██████████| 2000/2000 [00:10<00:00, 199.84it/s]
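The idea behind a Gibbs-type scheme with per-parameter samplers is to update one unknown at a time while holding the others fixed. The sketch below illustrates this with a simplified stand-in: random-walk Metropolis updates for each coordinate of a toy two-dimensional Gaussian target. It is not CUQIpy's `HybridGibbs` implementation, it does not use MALA, and it does not sample the bathtub posterior. The target, the `scale` value, and all names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy target: zero-mean Gaussian with unit variances and correlation 0.5
rho = 0.5
precision = np.linalg.inv(np.array([[1.0, rho], [rho, 1.0]]))

def log_target(x):
    # Unnormalized log-density of the toy target
    return -0.5 * x @ precision @ x

def mh_within_gibbs(n_samples, scale=1.0):
    x = np.zeros(2)
    chain = np.empty((n_samples, 2))
    for i in range(n_samples):
        # Sweep over the coordinates, updating one at a time
        for j in range(2):
            proposal = x.copy()
            proposal[j] += scale * rng.standard_normal()
            # Metropolis accept/reject step for a symmetric proposal
            log_ratio = log_target(proposal) - log_target(x)
            if np.log(rng.uniform()) < log_ratio:
                x = proposal
        chain[i] = x
    return chain

chain = mh_within_gibbs(5000)
print("sample mean:", chain.mean(axis=0))
```

In the demo itself, the per-coordinate update is delegated to the sampler chosen for each parameter in `sampling_strategy`.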

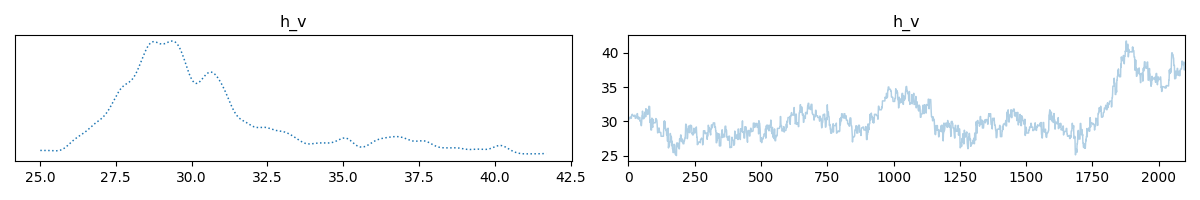

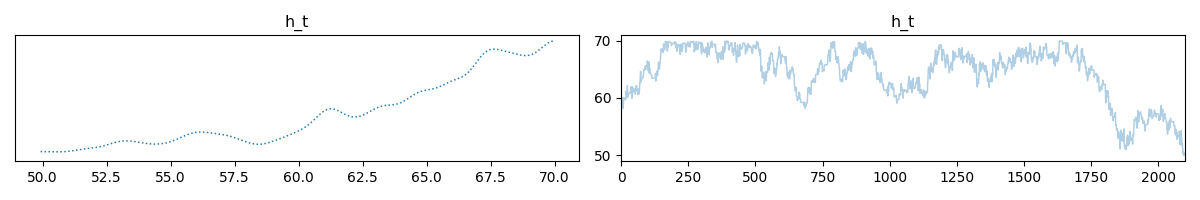

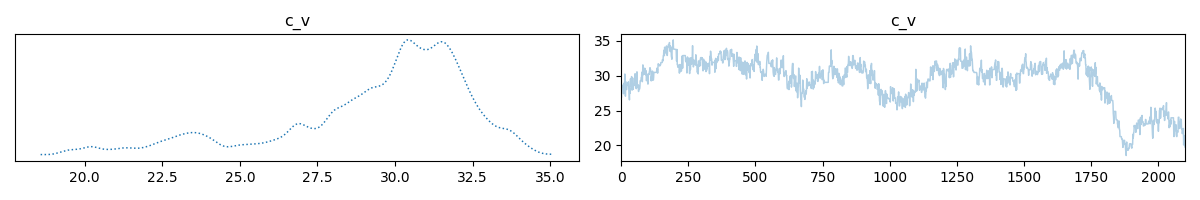

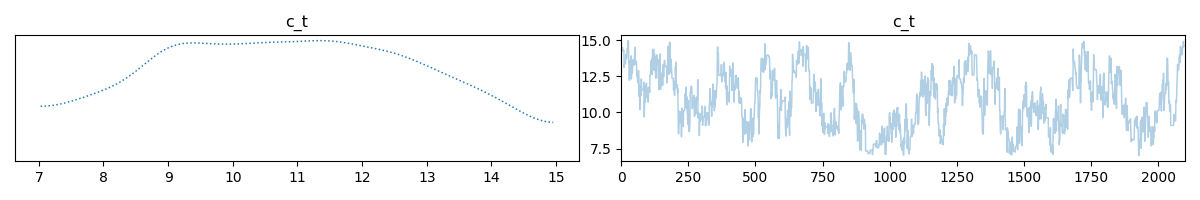

Show results (mean and trace plots)#

# Compute mean values

mean_h_v = samples['h_v_dist'].mean()

mean_h_t = samples['h_t_dist'].mean()

mean_c_v = samples['c_v_dist'].mean()

mean_c_t = samples['c_t_dist'].mean()

# Print mean values

print(f"Mean h_v: {mean_h_v}, Mean h_t: {mean_h_t}, Mean c_v: {mean_c_v}, Mean c_t: {mean_c_t}")

print("Measured volume:", 60)

print("Mean predicted volume:", mean_h_v + mean_c_v)

print()

print("Measured temperature:", 38)

print("Mean predicted temperature:", (mean_h_v * mean_h_t + mean_c_v * mean_c_t) / (mean_h_v + mean_c_v))

# Plot trace of samples

samples['h_v_dist'].plot_trace();

samples['h_t_dist'].plot_trace();

samples['c_v_dist'].plot_trace();

samples['c_t_dist'].plot_trace();

Mean h_v: [30.6012947], Mean h_t: [64.38596412], Mean c_v: [29.38593915], Mean c_t: [10.87458958]

Measured volume: 60

Mean predicted volume: [59.98723386]

Measured temperature: 38

Mean predicted temperature: [38.1723534]
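The predicted temperature printed above is the forward map evaluated at the mean parameter values. Because the temperature formula is nonlinear in the inputs, this is in general not the same number as the mean of the per-sample predicted temperatures, whereas for the volume (a plain sum) the two coincide. The sketch below illustrates the difference using synthetic stand-in draws, not the samples from this demo; the ranges and names are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 2000

# Synthetic stand-in draws (NOT the posterior samples from the demo)
h_v = rng.uniform(20, 40, n)
h_t = rng.uniform(55, 70, n)
c_v = rng.uniform(20, 40, n)
c_t = rng.uniform(8, 12, n)

# Push every draw through the forward map, then average
volume_per_sample = h_v + c_v
temp_per_sample = (h_v * h_t + c_v * c_t) / (h_v + c_v)
mean_of_predictions = temp_per_sample.mean()

# Average the draws first, then apply the forward map once
prediction_at_mean = (
    (h_v.mean() * h_t.mean() + c_v.mean() * c_t.mean())
    / (h_v.mean() + c_v.mean())
)

print("mean of predicted temperatures:", mean_of_predictions)
print("temperature at mean parameters:", prediction_at_mean)
```

Pushing each sample through the forward map also gives a spread for the predictions, which a single evaluation at the mean does not.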


Total running time of the script: (0 minutes 10.966 seconds)